About this document

This document contains the course notes for Graph Theory and

Additive Combinatorics, a graduate-level course taught by Prof.

Yufei Zhao at MIT in Fall 2019.

The notes were written by the students of the class based on the

lectures, and edited with the help of the professor.

The notes have not been thoroughly checked for accuracy, espe-

cially attributions of results. They are intended to serve as study

resources and not as a substitute for professionally prepared publica-

tions. We apologize for any inadvertent inaccuracies or misrepresen-

tations.

More information about the course, including problem sets and

lecture videos (to appear), can be found on the course website:

http://yufeizhao.com/gtac/

Contents

A guide to editing this document 7

1 Introduction 13

1.1 Schur’s theorem . . . . . . . . . . . . . . . . . . . . . . . . 13

1.2 Highlights from additive combinatorics . . . . . . . . . . 15

1.3 What’s next? . . . . . . . . . . . . . . . . . . . . . . . . . . 18

I Graph theory 21

2 Forbidding subgraphs 23

2.1 Mantel’s theorem: forbidding a triangle . . . . . . . . . . 23

2.2 Turán’s theorem: forbidding a clique . . . . . . . . . . . . 24

2.3 Hypergraph Turán problem . . . . . . . . . . . . . . . . . 26

2.4 Erd˝os–Stone–Simonovits theorem (statement): forbidding

a general subgraph . . . . . . . . . . . . . . . . . . . . . . 27

2.5 K˝ovári–Sós–Turán theorem: forbidding a complete bipar-

tite graph . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.6 Lower bounds: randomized constructions . . . . . . . . . 31

2.7 Lower bounds: algebraic constructions . . . . . . . . . . 34

2.8 Lower bounds: randomized algebraic constructions . . . 37

2.9 Forbidding a sparse bipartite graph . . . . . . . . . . . . 40

3 Szemerédi’s regularity lemma 49

3.1 Statement and proof . . . . . . . . . . . . . . . . . . . . . 49

3.2 Triangle counting and removal lemmas . . . . . . . . . . 53

3.3 Roth’s theorem . . . . . . . . . . . . . . . . . . . . . . . . 58

3.4 Constructing sets without 3-term arithmetic progressions 59

3.5 Graph embedding, counting and removal lemmas . . . . 61

3.6 Induced graph removal lemma . . . . . . . . . . . . . . . 65

3.7 Property testing . . . . . . . . . . . . . . . . . . . . . . . . 69

3.8 Hypergraph removal lemma . . . . . . . . . . . . . . . . . 70

3.9 Hypergraph regularity . . . . . . . . . . . . . . . . . . . . 71

3.10 Spectral proof of Szemerédi regularity lemma . . . . . . 74

4

4 Pseudorandom graphs 77

4.1 Quasirandom graphs . . . . . . . . . . . . . . . . . . . . . 77

4.2 Expander mixing lemma . . . . . . . . . . . . . . . . . . . 82

4.3 Quasirandom Cayley graphs . . . . . . . . . . . . . . . . 84

4.4 Alon–Boppana bound . . . . . . . . . . . . . . . . . . . . 86

4.5 Ramanujan graphs . . . . . . . . . . . . . . . . . . . . . . 88

4.6 Sparse graph regularity and the Green–Tao theorem . . 89

5 Graph limits 95

5.1 Introduction and statements of main results . . . . . . . 95

5.2 W-random graphs . . . . . . . . . . . . . . . . . . . . . . . 99

5.3 Regularity and counting lemmas . . . . . . . . . . . . . . 100

5.4 Compactness of the space of graphons . . . . . . . . . . . 103

5.5 Applications of compactness . . . . . . . . . . . . . . . . 106

5.6 Inequalities between subgraph densities . . . . . . . . . . 110

II Additive combinatorics 119

6 Roth’s theorem 121

6.1 Roth’s theorem in finite fields . . . . . . . . . . . . . . . . 121

6.2 Roth’s proof of Roth’s theorem in the integers . . . . . . 126

6.3 The polynomial method proof of Roth’s theorem in the fi-

nite field model . . . . . . . . . . . . . . . . . . . . . . . . 132

6.4 Roth’s theorem with popular differences . . . . . . . . . 137

7 Structure of set addition 141

7.1 Structure of sets with small doubling . . . . . . . . . . . 141

7.2 Plünnecke–Ruzsa inequality . . . . . . . . . . . . . . . . . 144

7.3 Freiman’s theorem over finite fields . . . . . . . . . . . . 147

7.4 Freiman homomorphisms . . . . . . . . . . . . . . . . . . 149

7.5 Modeling lemma . . . . . . . . . . . . . . . . . . . . . . . 150

7.6 Bogolyubov’s lemma . . . . . . . . . . . . . . . . . . . . . 153

7.7 Geometry of numbers . . . . . . . . . . . . . . . . . . . . 156

7.8 Proof of Freiman’s theorem . . . . . . . . . . . . . . . . . 158

7.9 Freiman’s theorem for general abelian groups . . . . . . 160

7.10 The Freiman problem in nonabelian groups . . . . . . . . 161

7.11 Polynomial Freiman–Ruzsa conjecture . . . . . . . . . . . 163

7.12 Additive energy and the Balog–Szémeredi–Gowers theo-

rem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

8 The sum-product problem 171

8.1 Crossing number inequality . . . . . . . . . . . . . . . . . 171

8.2 Incidence geometry . . . . . . . . . . . . . . . . . . . . . . 172

8.3 Sum-product via multiplicative energy . . . . . . . . . . 174

Sign-up sheet

Please sign up here for writing lecture notes. Some lectures can be covered by two students working in

collaboration, depending on class enrollment. Please coordinate among yourselves.

When editing this page, follow your name by your MIT email formatted using \email{[email protected]}.

8. 10/2: Lingxian Zhang l

_

A guide to editing this document

Please read this section carefully.

Expectations and timeline

Everyone enrolled in the course for credit should sign up to write

notes for a lecture (possibly pairing up depending on enrollment) by

editing the signup.tex file.

Please sign up on Overleaf using your real name (so that we can see

who is editing what). You can gain read/write access to these files

from the URL I emailed to the class or by accessing the link in Stellar.

The URL from the course website does not allow editing.

All class participants are expected and encouraged to contribute to

editing the notes for typos and general improvements.

Responsibilities for writers

By the end of the day after the lecture, you should put i.e., by Tuesday night for Monday lec-

tures and Thursday night for Wednes-

day lectures

up a rough draft of the lecture notes that should, at the minimum,

include all theorem statements as well as bare bone outlines of proofs

discussed in lecture. This will be helpful for the note-takers of the

following lecture.

Within four days of the lecture, you should complete a pol- i.e., by Friday for Monday lectures and

Sunday for Wednesday lectures

ished version of the lecture notes with a quality of exposition similar

to that of the first chapter, including discussions, figures (wherever

helpful), and bibliographic references. Please follow the style guide

below for consistency.

Please note that the written notes are supposed to more than sim-

ply a transcript of what was written on the blackboard. It is impor-

tant to include discussions and motivations, and have ample “bridge”

paragraphs connecting statements of definitions, theorems, proofs,

etc.

8

(please cc your coauthor) to set up a 30-min appointment to go over

your writing. Let me know when you will be available in the upcom-

ing three days.

At the appointment, ideally within a week of the lecture, please

bring a printed copy containing the pages of your writing, and we

will go over the notes together for comments. After our one-on-one

meeting, you are expected to edit the notes according to feedback

as soon as possible while your memory is still fresh, and complete

the revision within three days of our meeting. Please email me again

when your revision is complete. If the comments are not satisfactorily

addressed, then we may need to set up additional appointments,

which is not ideal.

L

A

T

E

X style guide

Please follow these styles when editing this document. Use lec1.tex

as an example.

Always make sure that this document compiles without errors!

Files Start a new file lec#.tex for each lecture and add \input{lec#}

to the main file. Begin the file with the lecture date and your name(s)

using the following command. If the file starts a new chapter or sec-

tion, then insert the following line right after the \begin{section} or

\begin{chapter} or else the label will appear at the wrong location.

\dateauthor{9/9}{Yufei Zhao} 9/9: Yufei Zhao

English Please use good English and write complete sentences.

Never use informal shorthand “blackboard” notation such as ⇒, ∃,

and ∀ in formal writing (unless you are actually writing about math-

ematical logic, which we will not do here). Avoid abbreviations such

as iff and s.t.. Avoid beginning a sentence with math or numbers.

This is a book Treat this document as a book. Do not refer to “lec-

tures.” Do not say “last lecture we . . . .” Do not repeat theorems

carried between consecutive lectures. Instead, label theorems and

refer to them using \cref. You may need to coordinate with your

classmates who wrote up earlier lectures.

As you may have guessed, the goal is to eventually turn this docu-

ment into a textbook. I thank you in advance for your contributions.

Theorems Use Theorem for major standalone results (even if the

result is colloquially known as a “lemma”, such as the “triangle re-

9

moval lemma”), Proposition for standalone results of lesser impor-

tance, Corollary for direct consequences of earlier statements, and

Lemma for statements whose primary purpose is to serve as a step in

a larger proof but otherwise do not have major independent interest.

Always completely state all hypotheses in theorems, lemmas,

etc. Do not assume that the “standing assumptions” are somehow

understood to carry into the theorem statement.

Example for how to typeset a theorem:

\begin{theorem}[Roth’s theorem]

\label{thm:roth-guide}

\citemr{Roth (1953)}{51853}

Every subset of the integers with positive upper density

contains a 3-term arithmetic progression.

\end{theorem}

Theorem 0.1 (Roth’s theorem). Every subset of the integers with positive Roth (1953)

upper density contains a 3-term arithmetic progression.

If the result has a colloquial name, include the name in square

brackets “[...]” immediately following \begin{theorem} (do not

insert other text in between).

Proofs If the proof of a theorem follows immediately after its state-

ment, use:

\begin{proof} ...\end{proof}

Or, if the proof does not follow immediately after the theorem state-

ment, then use:

\begin{proof}[Proof of \cref{thm:XYZ}] ...\end{proof}

Emph Use \emph{...} to highlight new terms being defined, or

other important text, so that they can show up like this. If you

simply wish to italicize or bold some text, use \textit{...} and

\textbf{...} instead.

Labels Label your theorems, equations, tables, etc. according to the

conventions in Table 1. Use short and descriptive labels. Do not use

space or underscore ‘

_

’ in labels (dashes ‘-’ are encouraged). Labels

will show up in the PDF in blue.

Example of a good label: \label{thm:K3-rem}

Example of a bad label: \label{triangle removal lemma}

Use \cref... (from the cleveref package) to cite a theorem so

that you do not have to write the words Theorem, Lemma, etc. E.g.,

Now we prove \cref{thm:roth-guide}.

produces

Now we prove Theorem 0.1.

10

Type Command Label

Theorem \begin{theorem} \label{thm:

***

}

Proposition \begin{proposition} \label{prop:

***

}

Lemma \begin{lemma} \label{lem:

***

}

Corollary \begin{corollary} \label{cor:

***

}

Conjecture \begin{conjecture} \label{conj:

***

}

Definition \begin{definition} \label{def:

***

}

Example \begin{example} \label{ex:

***

}

Problem \begin{problem} \label{prob:

***

}

Question \begin{question} \label{qn:

***

}

Open problem \begin{open} \label{open:

***

}

Remark \begin{remark} \label{rmk:

***

}

Claim \begin{claim} \label{clm:

***

}

Fact \begin{fact} \label{fact:

***

}

Chapter \chapter{...} \label{ch:

***

}

Section \section{...} \label{sec:

***

}

Subsection \subsection{...} \label{sec:

***

}

Figure \begin{figure} \label{fig:

***

}

Table \begin{table} \label{tab:

***

}

Equation \begin{equation} \label{eq:

***

}

Align \begin{align} \label{eq:

***

}

Multline \begin{multline} \label{eq:

***

}

Do not use eqnarray

Table 1: Format for labels

Citations It is your responsibility to look up citations and insert

them whenever appropriate. Use the following formats. These cus-

tom commands provide hyperlink to the appropriate sources in the

PDF.

• For modern published articles, look up the article on MathSciNet. https://mathscinet.ams.org/

Find its MR number (the number following MR... and remove

leading zeros), and use the following command:

\citemr{author(s) (year)}{MR number}

E.g.,

\citemr{Green and Tao (2008)}{2415379} Green and Tao (2008)

• For not-yet-published or unpublished articles that are available on

the preprint server arXiv, use

\citearxiv{author(s) (year+)}{arXiv number}

E.g.,

11

\citearxiv{Peluse (2019+)}{1909.00309} Peluse (2019+)

Do not use this format if the paper is indexed on MathSciNet.

• For less standard references, or those that are not available on

MathSciNet or arXiv, use

\citeurl{author(s) (year)}{url}

E.g.,

\citeurl{Schur (1916)}{https://eudml.org/doc/145475} Schur (1916)

• In rare instances, for very old references or those for which no

representative URL is available, use

\citecustom{bibliographic data}

E.g.,

\citecustom{B.~L.~van der Waerden, Beweis einer Baudetschen B. L. van der Waerden, Beweis einer

Baudetschen Vermutung. Nieuw

Arch. Wisk. 15, 212–216, 1927.

Vermutung. \textit{Nieuw Arch.~Wisk.} \textbf{15}, 212--216,

1927.}

Figures Draw figures whenever they would help in understanding

the written text. For this document, the two acceptable methods of

figure drawing are:

https://www.overleaf.com/learn/

latex/TikZ

_

package

• (Preferred) TikZ allows you to produce high quality figures by

writing code directly in LaTeX. It is a useful skill to learn!

https://ipe.otfried.org

• IPE is an easier to use WYSIWYG program that integrates well

with LaTeX in producing math formulas inside figures. You should

include the figure as PDF in the graphics/ subdirectory.

Unacceptable formats include: hand-drawn figures, MS Paint, . . . .

Ask me if you have a strong reason to want to use another vector-

graphics program.

Macros See macros.tex for existing macros. In particular, black-

board bold letters such as R can be entered as \RR.

While you may add to macros.tex, you are discouraged from

doing so unless there is a good reason. In particular, do not add a

macro if it will only be used a few times.

https://en.wikibooks.org/wiki/

LaTeX/Special

_

Characters

Accents Accent marks in names should be respected, e.g., \H{o} for

the ˝o in Erd˝os, and \’e for the é in Szemerédi.

12

https://github.com/Tufte-LaTeX/

tufte-latex

Tufte This book is formatted using the tufte-book class. See the

Tufte-LaTeX example and source for additional functionalities, in-

cluding:

• \marginnote{...} for placing text in the right margin;

• \begin{marginfigure} ...\end{marginfigure} for placing figures

in the right margin

• \begin{fullwidth} ...\end{fullwidth} for full width texts.

The headings subsubsections and subparagraph are unsupported.

Minimize the use of subsection unless there is a good reason.

Version labels It would be helpful if you could add an Overleaf ver-

sion label (top-right corner in browser. . . History . . . Label this version)

after major milestones (e.g., completion of notes for a lecture).

1

Introduction

1.1 Schur’s theorem

9/9: Yufei Zhao

In the 1910’s, Schur attempted to prove Fermat’s Last Theorem by

Schur (1916)

reducing the equation X

n

+ Y

n

= Z

n

modulo a prime p. However,

he was unsuccessful. It turns out that, for every positive integer n,

the equation has nontrivial solutions mod p for all sufficiently large

primes p, which Schur established by proving the following classic

result.

Theorem 1.1 (Schur’s theorem). If the positive integers are colored with

finitely many colors, then there is always a monochromatic solution to x +

y = z (i.e., x, y, z all have the same color).

We will prove Schur’s theorem shortly. But first, let us show how

to deduce the existence of solutions to X

n

+ Y

n

≡ Z

n

(mod p) using

Schur’s theorem.

Schur’s theorem is stated above in its “infinitary” (or qualitative)

form. It is equivalent to a “finitary” (or quantitative) formulation

below.

We write [N] := {1, 2, . . . , N}.

Theorem 1.2 (Schur’s theorem, finitary version). For every positive

integer r, there exists a positive integer N = N(r) such that if the elements

of [N] are colored with r colors, then there is a monochromatic solution to

x + y = z with x, y, z ∈ [N].

With the finitary version, we can also ask quantitative questions

such as how big does N(r) have to be as a function of r. For most

questions of this type, we do not know the answer, even approxi-

mately.

Let us show that the two formulations, Theorem 1.1 and Theo-

rem 1.2, are equivalent. It is clear that the finitary version of Schur’s

theorem implies the infinitary version. To see that the infinitary ver-

sion implies the finitary version, fix r, and suppose that for every

14 schur’s theorem

N there is some coloring φ

N

: [N] → [r] that avoids monochro-

matic solutions to x + y = z. We can take an infinite subsequence

of (φ

N

) such that, for every k ∈ N, the value of φ

N

(k) stabilizes as

N increases along this subsequence. Then the φ

N

’s, along this subse-

quence, converges pointwise to some coloring φ : N → [r] avoiding

monochromatic solutions to x + y = z, but this contradicts the infini-

tary statement.

Let us now deduce Schur’s claim about X

n

+ Y

n

≡ Z

n

(mod p).

Theorem 1.3. Let n be a positive integer. For all sufficiently large primes Schur (1916)

p, there are X, Y, Z ∈ {1, . . . , p −1} such that X

n

+ Y

n

≡ Z

n

(mod p).

Proof of Theorem 1.3 assuming Schur’s theorem (Theorem 1.2). We write

(Z/pZ)

×

for the group of nonzero residues mod p under multi-

plication. Let H be the subgroup of n-th powers in (Z/pZ)

×

. The

index of H in (Z/pZ)

×

is at most n. So the cosets of H partition

{1, 2, . . . , p − 1} into at most n sets. By the finitary statement of

Schur’s theorem (Theorem 1.2), for p large enough, there is a solu-

tion to

x + y = z in Z

in one of the cosets of H, say aH for some a ∈ (Z/pZ)

×

. Since H

consists of n-th powers, we have x = aX

n

, y = aY

n

, and z = aZ

n

for

some X, Y, Z ∈ (Z /pZ)

×

. Thus

aX

n

+ aY

n

≡ aZ

n

(mod p).

Hence

X

n

+ Y

n

≡ Z

n

(mod p)

as desired.

Now let us prove Theorem 1.2 by deducing it from a similar

sounding result about coloring the edges of a complete graph. The

next result is a special case of Ramsey’s theorem.

Theorem 1.4. For every positive integer r, there is some integer N = N(r) Ramsey (1929)

such that if the edges of K

N

, the complete graph on N vertices, are colored

with r colors, then there is always a monochromatic triangle.

frank ramsey (1903–1930) had made

major contributions to mathematical

logic, philosophy, and economics,

before his untimely death at age 26

after suffering from chronic liver

problems.

Proof. We use induction on r. Clearly N(1) = 3 works for r = 1. Let

r ≥ 2 and suppose that the claim holds for r −1 colors with N = N

0

.

We will prove that taking N = r(N

0

−1) + 2 works for r colors..

Suppose we color the edges of a complete graph on r(N

0

−1) + 2

vertices using r colors. Pick an arbitrary vertex v. Of the r(N

0

−1) + 1

edges incident to v, by the pigeonhole principle, at least N

0

edges in-

cident to v have the same color, say red. Let V

0

be the vertices joined

to v by a red edge. If there is a red edge inside V

0

, we obtain a red

introduction 15

triangle. Otherwise, there are at most r − 1 colors appearing among

|V

0

| ≥ N

0

vertices, and we have a monochromatic triangle by induc-

tion.

We are now ready to prove Schur’s theorem by setting up a graph

whose triangles correspond to solutions to x + y = z, thereby allow-

ing us to “transfer” the above result to the integers.

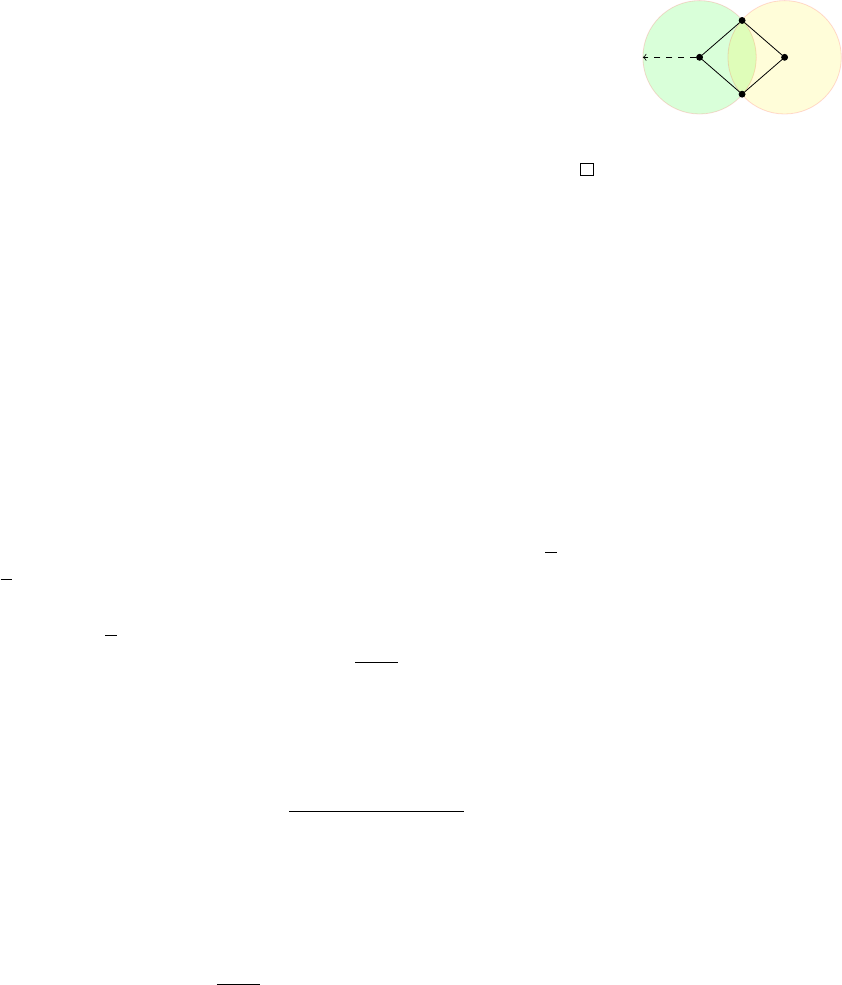

i j

k

φ(j −i) φ( k − j)

φ(k −i)

Proof of Schur’s theorem (Theorem 1.2). Let φ : [N] → [r] be a coloring.

Color the edges of a complete graph with vertices {1, . . . , N + 1} by

giving the edge {i, j} with i < j the color φ(j − i). By Theorem 1.4,

if N is large enough, then there is a monochromatic triangle, say on

vertices i < j < k. So φ (j − i) = φ(k − j) = φ(k − i). Take x = j − i,

y = k − j, and z = k −i. Then φ(x) = φ(y) = φ(z) and x + y = z, as

desired.

Notice how we solved a number theory problem by moving over

to a graph theoretic setup. The Ramsey theorem argument in Theo-

rem 1.4 is difficult to do directly inside the integers. Thus we gained

greater flexibility by considering graphs. Later on we will see other

more sophisticated examples of this idea, where taking a number

theoretic problem to the land of graph theory gives us a new perspec-

tive.

1.2 Highlights from additive combinatorics

Schur’s theorem above is one of the earliest examples of an area now

known as additive combinatorics, which is a term coined by Terry Green (2009)

Tao in the early 2000’s to describe a rapidly growing body of math-

ematics motivated by simple-to-state questions about addition and

multiplication of integers. The problems and methods in additive

combinatorics are deep and far-reaching, connecting many different

areas of mathematics such as graph theory, harmonic analysis, er-

godic theory, discrete geometry, and model theory. The rest of this

section highlights some important developments in additive combi-

natorics in the past century.

In the 1920’s, van der Waerden proved the following result about

monochromatic arithmetic progressions in the integers.

Theorem 1.5 (van der Waerden’s theorem). If the integers are colored B. L. van der Waerden, Beweis einer

Baudetschen Vermutung. Nieuw

Arch. Wisk. 15, 212–216, 1927.

with finitely many colors, then one of the color classes must contain arbi-

trarily long arithmetic progressions.

Remark 1.6. Having arbitrarily long arithmetic progressions is very

different from having infinitely long arithmetic progressions. As an

exercise, show that one can color the integers using just two colors so

16 highlights from additive combinatorics

that there are no infinitely long monochromatic arithmetic progres-

sions.

In the 1930’s, Erd˝os and Turán conjectured a stronger statement, Erd˝os and Turán (1936)

that any subset of the integers with positive density contains arbitrar-

ily long arithmetic progressions. To be precise, we say that A ⊆ Z

has positive upper density if

lim sup

N→∞

|A ∩{−N, . . . , N}|

2N + 1

> 0.

(There are several variations of this definition—the exact formulation

is not crucial.)

endre szemerédi (1940– ) received

the prestigious Abel Prize in 2012

“for his fundamental contributions to

discrete mathematics and theoretical

computer science, and in recognition

of the profound and lasting impact of

these contributions on additive number

theory and ergodic theory.”

In the 1950’s, Roth proved the conjecture for 3-term arithmetic

progression using Fourier analytic methods. In the 1970’s, Szemerédi

fully settled the conjecture using combinatorial techniques. These are

landmark theorems in the field. Much of what we will discuss are

motivated by these results and the developments around them.

Theorem 1.7 (Roth’s theorem). Every subset of the integers with positive

Roth (1953)

upper density contains a 3-term arithmetic progression.

Theorem 1.8 (Szemerédi’s theorem). Every subset of the integers with Szemerédi (1975)

positive upper density contains arbitrarily long arithmetic progressions.

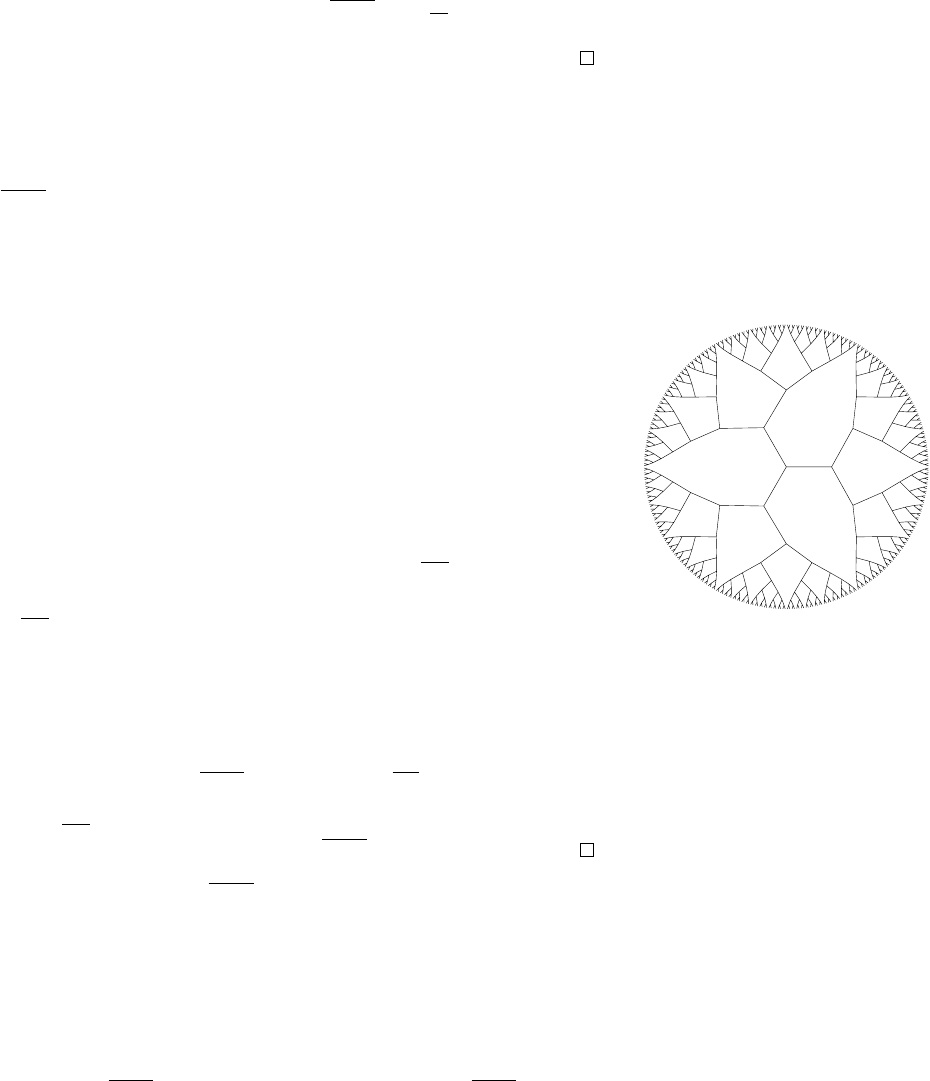

Szemerédi’s proof was a combinatorial

tour de force. This figures is taken

from the introduction of his paper

showing the logical dependencies of his

argument.

Szemerédi’s theorem is deep and intricate. This important work

led to many subsequent developments in additive combinatorics.

Several different proofs of Szemerédi’s theorem have since been

discovered, and some of them have blossomed into rich areas of

mathematical research. Here are some the most influential modern

proofs of Szemerédi’s theorem (in historical order):

• The ergodic theoretic approach (Furstenberg)

Furstenberg (1977)

• Higher-order Fourier analysis (Gowers)

Gowers (2001)

• Hypergraph regularity lemma (Rödl et al./Gowers)

Rödl et al. (2005)

Gowers (2007)

Another modern proof of Szemerédi’s theorem results from the

density Hales–Jewett theorem, which was originally proved by Fursten-

berg and Katznelson using ergodic theory, and subsequently a new Furstenberg and Katznelson (1991)

Polymath (2012)

All subsequent Polymath Project papers

are written under the pseudonym

D. H. J. Polymath, whose initials stand

for “density Hales–Jewett.”

combinatorial proof was found in the first successful Polymath

Project, an online collaborative project initiated by Gowers.

The relationships between these disparate approaches are not yet

completely understood, and there are many open problems, espe-

cially regarding quantitative bounds. A unifying theme underlying

all known approaches to Szemerédi’s theorem is the dichotomy be- Tao (2007)

tween structure and pseudorandomness. We will later see different

introduction 17

facets of this dichotomy both in the context of graph theory as well as

in number theory.

Here are a few other important subsequent developments to Sze-

merédi’s theorem.

Instead of working over subsets of integers, let us consider subsets

of a higher dimensional lattice Z

d

. We say that A ⊂ Z

d

has positive

upper density if

lim sup

N→∞

|A ∩[−N, N]

d

|

(2N + 1)

d

> 0

(as before, other similar definitions are possible). We say that A con-

tains arbitrary constellations if for every finite set F ⊂ Z

d

, there is

some a ∈ Z

d

and t ∈ Z

>0

such that a + t · F = {a + tx : x ∈ F} is

contained in A. In other words, A contains every finite pattern, each

consisting of some finite subset of the integer grid allowing dilation

and translation. The following multidimensional generalization of

Szemerédi’s theorem was proved by Furstenberg and Katznelson ini-

tially using ergodic theory, though a combinatorial proof was later

discovered as a consequence of the hypergraph regularity method

mentioned earlier.

Theorem 1.9 (Multidimensional Szemerédi theorem). Every subset of Furstenberg and Katznelson (1978)

Z

d

of positive upper density contains arbitrary constellations.

For example, the theorem implies that every subset of Z

d

of pos-

itive upper density contains a 10 × 10 set of points that form an

axis-aligned square grid.

There is also a polynomial extension of Szemerédi’s theorem. Let

us first state a special case, originally conjectured by Lovász and

proved independently by Furstenberg and Sárk˝ozy.

Theorem 1.10. Any subset of the integers with positive upper density Furstenberg (1977)

Sárközy (1978)

contains two numbers differing by a square.

In other words, the set always contains {x, x + y

2

} for some x ∈ Z

and y ∈ Z

>0

. What about other polynomial patterns? The following

polynomial generalization was proved by Bergelson and Leibman.

Theorem 1.11 (Polynomial Szemerédi theorem). Suppose A ⊂ Z Bergelson and Leibman (1996)

has positive upper density. If P

1

, . . . , P

k

∈ Z[X] are polynomials with

P

1

(0) = ··· = P

k

(0) = 0, then there exist x ∈ Z and y ∈ Z

>0

such that

x + P

1

(y), . . . , x + P

k

(y) ∈ A.

We leave it as an exercise to formulate a common extension of the

above two theorems (i.e., a multidimensional polynomial Szemerédi

theorem). Such a theorem was also proved by Bergelson and Leib-

man.

18 what’s next?

We will not cover the proof of Theorems 1.9 and 1.11. In fact,

currently the only known general proof of the polynomial Szemerédi

theorem uses ergodic theory, though for special cases there are some

recent exciting developments. Peluse (2019+)

Building on Szemerédi’s theorem as well as other important de-

velopments in number theory, Green and Tao proved their famous

theorem that settled an old folklore conjecture about prime numbers.

Their theorem is considered one of the most celebrated mathematical

results this century.

Theorem 1.12 (Green–Tao theorem). The primes contain arbitrarily long Green and Tao (2008)

arithmetic progressions.

We will discuss many central ideas behind the proof of the Green–

Tao theorem. See the reference on the right for a modern exposition Conlon, Fox, and Zhao (2014)

of the Green–Tao theorem emphasizing the graph theoretic perspec-

tive, and incorporating some simplifications of the proof that have

been found since the original work.

1.3 What’s next?

One of our goals is to understand two different proofs of Roth’s

theorem, which can be rephrased as:

Theorem 1.13 (Roth’s theorem). Every subset of [N] that does not con-

tain 3-term arithmetic progressions has size o(N).

Roth originally proved his result using Fourier analytic techniques,

which we will see in the second half of this book. In the 1970’s, lead-

ing up to Szemerédi’s proof of his landmark result, Szemerédi de- Szemerédi (1978)

veloped an important tool known as the graph regularity lemma.

Ruzsa and Szemerédi used the graph regularity lemma to give a new Ruzsa and Szemerédi (1978)

graph theoretic proof of Roth’s theorem. One of our first goals is to

understand this graph theoretic proof.

As in the proof of Schur’s theorem, we will formulate a graph

theoretic problem whose solution implies Roth’s theorem. This topic

fits nicely in an area of combinatorics called extremal graph theory. A

starting point (historically and also pedagogically) in extremal graph

theory is the following question:

Question 1.14. What is the maximum number of edges in a triangle-

free graph on n vertices?

This question is relatively easy, and it was answered by Mantel in

the early 1900’s (and subsequently rediscovered and generalized by

Turán). It will be the first result that we shall prove next. However,

even though it sounds similar to Roth’s theorem, it cannot be used to

introduction 19

deduce Roth’s theorem. Later on, we will construct a graph that cor-

responds to Roth’s theorem, and it turns out that the right question

to ask is:

Question 1.15. What is the maximum number of edges in an n-vertex

graph where every edge is contained in a unique triangle?

This innocent looking question turns out to be incredible myste-

rious. We are still far from knowing the truth. We will later prove,

using Szemerédi’s regularity lemma, that any such graph must have

o(n

2

) edges, and we will then deduce Roth’s theorem from this graph

theoretic claim.

Part I

Graph theory

2

Forbidding subgraphs

9/11: Anlong Chua and Chris Xu

2.1 Mantel’s theorem: forbidding a triangle

We begin our discussion of extremal graph theory with the following

basic question.

Question 2.1. What is the maximum number of edges in an n-vertex

graph that does not contain a triangle?

Bipartite graphs are always triangle-free. A complete bipartite

graph, where the vertex set is split equally into two parts (or differing

by one vertex, in case n is odd), has

n

2

/4

edges. Mantel’s theorem

states that we cannot obtain a better bound:

Theorem 2.2 (Mantel). Every triangle-free graph on n vertices has at W. Mantel, "Problem 28 (Solution by H.

Gouwentak, W. Mantel, J. Teixeira de

Mattes, F. Schuh and W. A. Wythoff).

Wiskundige Opgaven 10, 60 —61, 1907.

most bn

2

/4c edges.

We will give two proofs of Theorem 2.2.

Proof 1. Let G = (V, E) a triangle-free graph with n vertices and m

edges. Observe that for distinct x, y ∈ V such that xy ∈ E, x and y

must not share neighbors by triangle-freeness.

x

y

N(x)

N(y)

Adjacent vertices have disjoint neigh-

borhoods in a triangle-free graph.

Therefore, d(x) + d(y) ≤ n, which implies that

∑

x∈V

d(x)

2

=

∑

xy∈E

(

d(x) + d(y)

)

≤ mn.

On the other hand, by the handshake lemma,

∑

x∈V

d(x) = 2m. Now

by the Cauchy–Schwarz inequality and the equation above,

4m

2

=

∑

x∈V

d(x)

!

2

≤ n

∑

x∈V

d(x)

2

!

≤ mn

2

;

hence m ≤ n

2

/4. Since m is an integer, this gives m ≤ bn

2

/4c.

Proof 2. Let G = (V, E) be as before. Since G is triangle-free, the

neighborhood N(x) of every vertex x ∈ V is an independent set.

x

y

z

An edge within N(x) creates a triangle

24 turán’s theorem: forbidding a clique

Let A ⊆ V be a maximum independent set. Then d(x) ≤ |A| for

all x ∈ V. Let B = V \ A. Since A contains no edges, every edge of G

intersects B. Therefore,

e(G) ≤

∑

x∈B

d(x) ≤ |A||B|

≤

AM-GM

$

|A| + |B|

2

2

%

=

n

2

4

.

Remark 2.3. For equality to occur in Mantel’s theorem, in the above

proof, we must have

• e(G) =

∑

x∈B

d(x), which implies that no edges are strictly in B.

•

∑

x∈B

d(x) = |A||B|, which implies that every vertex in B is com-

plete in A.

• The equality case in AM-GM must hold (or almost hold, when n is

odd), hence

|

|A| − |B|

|

≤ 1.

Thus a triangle-free graph on n vertices has exactly

n

2

/4

edges if

and only if it is the complete bipartite graph K

b

n/2

c

,

d

n/2

e

.

2.2 Turán’s theorem: forbidding a clique

Motivated by Theorem 2.2, we turn to the following more general

question.

Question 2.4. What is the maximum number of edges in a K

r+1

-free

graph on n vertices?

Extending the bipartite construction earlier, we see that an r-partite

graph does not contain any copy of K

r+1

.

Definition 2.5. The Turán graph T

n,r

is defined to be the complete,

n-vertex, r-partite graph, with part sizes either

n

r

or

n

r

.

The Turán graph T

10,3

In this section, we prove that T

n,r

does, in fact, maximize the num-

ber of edges in a K

r

-free graph:

Theorem 2.6 (Turán). If G is an n-vertex K

r+1

-free graph, then e(G) ≤

P. Turán, On an extremal problem in

graph theory. Math. Fiz. Lapok 48, 436

—452, 1941.

e(T

n,r

).

When r = 2, this is simply Theorem 2.2.

We now give three proofs of Theorem 2.6. The first two are in the

same spirit as the proofs of Theorem 2.2.

forbidding subgraphs 25

Proof 1. Fix r. We proceed by induction on n. Observe that the state-

ment is trivial if n ≤ r, as K

n

is K

r+1

-free. Now, assume that n > r

and that Turán’s theorem holds for all graphs on fewer than n ver-

tices. Let G be an n-vertex, K

r+1

-free graph with the maximum pos-

sible number of edges. Note that G must contain K

r

as a subgraph,

or else we could add an edge in G and still be K

r+1

-free. Let A be the

vertex set of an r-clique in G, and let B := V\A. Since G is K

r+1

-free,

every v ∈ B has at most r −1 neighbors in A. Therefore

e(G) ≤

r

2

+ ( r −1)|B| + e(B)

≤

r

2

+ ( r −1)(n −r) + e(T

n−r,r

)

= e(T

n,r

).

The first inequality follows from counting the edges in A, B, and

everything in between. The second inequality follows from the in-

ductive hypothesis. The last equality follows by noting removing

one vertex from each of the r parts in T

n,r

would remove a total of

(

r

2

)

+ ( r −1)(n −r) edges.

Proof 2 (Zykov symmetrization). As before, let G be an n-vertex, K

r+1

-

free graph with the maximum possible number of edges.

We claim that the non-edges of G form an equivalence relation;

that is, if xy, yz /∈ E, then xz /∈ E. Symmetry and reflexivity are easy

to check. To check transitivity, Assume for purpose of contradiction

that there exists x, y, z ∈ V for which xy, yz /∈ E but xz ∈ E.

If d(y) < d(x), we may replace y with a “clone” of x. That is, we

delete y and add a new vertex x

0

whose neighbors are precisely the

as the neighbors of x (and no edge between x and x

0

). (See figure on

the right.)

x

x

0

x and its clone x

0

Then, the resulting graph G

0

is also K

r+1

-free since x was not in

any K

r+1

. On the other hand, G

0

has more edges than G, contradict-

ing maximality.

Therefore we have that d(y) ≥ d(x) for all xy /∈ E. Similarly,

d(y) ≥ d(z). Now, replace both x and z by “clones” of y. The new

graph G

0

is K

r+1

-free since y was not in any K

r+1

, and

e(G

0

) = e(G) − ( d(x) + d(z) −1) + 2d(y) > e(G),

contradicting maximality of e(G). Therefore such a triple (x, y, z)

cannot exist in G, and transitivity holds.

The equivalence relation shows that the complement of G is a

union of cliques. Therefore G is a complete multipartite graph with

at most r parts. One checks that increasing the number of parts in-

creases the number of edges in G. Similarly, one checks that if the

26 hypergraph turán problem

number of vertices in two parts differ by more than 1, moving one

vertex from the larger part to the smaller part increases the number

of edges in G. It follows that the graph that achieves the maximum

number of edges is T

n,r

.

Our third and final proof uses a technique called the probabilistic

method. In this method, one introduces randomness to a determinis-

tic problem in a clever way to obtain deterministic results.

Proof 3. Let G = (V, E) be an n-vertex, K

r+1

-free graph. Consider a

uniform random ordering σ of the vertices. Let

X = {v ∈ V : v is adjacent to all earlier vertices in σ}.

Observe that the set of vertices in X form a clique. Since the permuta-

tion was chosen uniformly at random, we have

P(v ∈ X) = P(v appears before all non-neighbors) =

1

n − d(v)

.

Therefore,

r ≥ E|X| =

∑

v∈V

P(v ∈ X) =

∑

v∈V

1

n − d(v)

convexity

≥

n

n −2m/n

.

Rearranging gives m ≤

1 −

1

r

n

2

2

(a bound that is already good for

most purposes). Note that if n is divisible by r, then the bound imme-

diately gives a proof of Turán’s theorem. When n is not divisible by

r, one needs to a bit more work and use convexity to argue that the

d(v) should be as close as possible. We omit the details.

2.3 Hypergraph Turán problem

The short proofs given in the previous sections make problems in

extremal graph theory seem deceptively simple. In reality, many

generalizations of what we just discussed remain wide open.

Here we discuss one notorous open problem that is a hypergraph

generalization of Mantel/Turán.

An r-uniform hypergraph consists of a vertex set V and an edge

set, where every edge is now an r-element subset of V. Graphs corre-

spond to r = 2.

Question 2.7. What is the maximum number of triples in an n vertex

3-uniform hypergraph without a tetrahedron?

Turán proposed the following construction, which is conjectured to

be optimal.

forbidding subgraphs 27

Example 2.8 (Turán). Let V be a set of n vertices. Partition V into

3 (roughly) equal sets V

1

, V

2

, V

3

. Add a triple {x, y, z} to e(G) if it

satisfies one of the four following conditions:

• x, y, z are in different partitions

• x, y ∈ V

1

and z ∈ V

2

• x, y ∈ V

2

and z ∈ V

3

• x, y ∈ V

3

and z ∈ V

1

where we consider x, y, z up to permutation (See Example 2.8). One

checks that the 3-uniform hypergraph constructed is tetrahedron-free,

and that it has edge density 5/9.

Turán’s construction of a tetrahedron-

free 3-uniform hypergraph

On the other hand, the best known upper bound is approximately

0.562 , obtained recently using the technique of flag algebras.

Keevash (2011)

Baber and Talbot (2011)

Razborov (2010)

2.4 Erd˝os–Stone–Simonovits theorem (statement): forbidding a

general subgraph

One might also wonder what happens if K

r+1

in Theorem 2.6 were

replaced with an arbitrary graph H:

Question 2.9. Fix some graph H. If G is an n vertex graph in which

H does not appear as a subgraph, what is the maximum possible

number of edges in G?

Notice that we only require H to be a

subgraph, not necessarily an induced

subgraph. An induced subgraph H

0

of G must contain all edges present

between the vertices of H

0

, while there

is no such restriction for arbitrary

subgraphs.

Definition 2.10. For a graph H and n ∈ N, define ex(n, H) to be the

maximum number of edges in an n-vertex H-free graph.

For example, Theorem 2.6 tells us that for any given r,

ex(n, K

r+1

) = e(T

n,r

) =

1 −

1

r

+ o(1)

n

2

where o(1) represents some quantity that goes to zero as n → ∞.

At a first glance, one might not expect a clean answer to Ques-

tion 2.9. Indeed, the solution would seem to depend on various char-

acteristics of H (for example, its diameter or maximum degree). Sur-

prisingly, it turns out that a single parameter, the chromatic number

of H, governs the growth of ex(n, H).

Definition 2.11. The chromatic number of a graph G, denoted χ(G),

is the minimal number of colors needed to color the vertices of G

such that no two adjacent vertices have the same color.

Example 2.12. χ( K

r+1

) = r + 1 and χ(T

n,r

) = r.

28 k

˝

ovári–sós–turán theorem: forbidding a complete bipartite graph

Observe that if H ⊆ G, then χ(H) ≤ χ(G). Indeed, any proper

coloring of G restricts to a proper coloring of H. From this, we gather

that if χ(H) = r + 1, then T

n,r

is H-free. Therefore,

ex(n, H) ≥ e(T

n,r

) =

1 −

1

r

+ o(1)

n

2

.

Is this the best we can do? The answer turns out to be affirmative.

Theorem 2.13 (Erd˝os–Stone–Simonovits). For all graphs H, we have Erd˝os and Stone (1946)

Erd˝os and Simonovits (1966)

lim

n→∞

ex(n, H)

(

n

2

)

= 1 −

1

χ(H) −1

.

We’ll skip the proof for now.

Remark 2.14. Later in the book we will show how to deduce Theo-

rem 2.13 from Theorem 2.6 using the Szemerédi regularity lemma.

Example 2.15. When H = K

3

, Theorem 2.13 tells us that

lim

n→∞

ex(n, H)

(

n

2

)

=

1

2

,

in agreement with Theorem 2.6.

When H = K

4

, we get

lim

n→∞

ex(n, H)

(

n

2

)

=

2

3

,

also in agreement with Theorem 2.6.

When H is the Peterson graph, Theorem 2.13 tells us that

lim

n→∞

ex(n, H)

(

n

2

)

=

1

2

,

which is the same answer as for H = K

3

! This is surprising since the

Peterson graph seems much more complicated than the triangle.

1

2

3

1

3

2

2

3

2

1

The Peterson graph with a proper

3-coloring.

2.5 K˝ovári–Sós–Turán theorem: forbidding a complete bipartite

graph

9/16: Jiyang Gao and Yinzhan Xu

The Erd˝os–Stone–Simonovits Theorem (Theorem 2.13) gives a first-

order approximation of ex(n, H) when χ(H) > 2. Unfortunately,

Theorem 2.13 does not tell us the whole story. When χ(H) = 2, i.e.

H is bipartite, the theorem implies that ex(n, H) = o(n

2

), which com-

pels us to ask if we may obtain more precise bounds. For example, if

we write ex(n, H) as a function of n, what its growth with respect to

n? This is an open problem for most bipartite graphs (for example,

K

4,4

) and the focus of the remainder of the chapter.

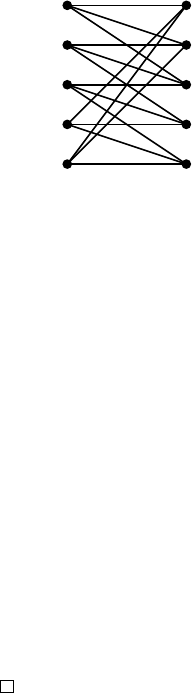

Let K

s,t

be the complete bipartite graph where the two parts of the

bipartite graph have s and t vertices respectively. In this section, we

consider ex(n, K

s,t

), and seek to answer the following main question:

An example of a complete bipartite

graph K

3,5

.

forbidding subgraphs 29

Question 2.16 (Zarankiewicz problem). For some r, s ≥ 1, what is

the maximum number of edges in an n-vertex graph which does not

contain K

s,t

as a subgraph.

Every bipartite graph H is a subgraph of some complete bipartite

graph K

s,t

. If H ⊆ K

s,t

, then ex(n, H) ≤ ex(n, K

s,t

). Therefore, by

understanding the upper bound on the extremal number of complete

bipartite graphs, we obtain an upper bound on the extremal number

of general bipartite graphs as well. Later, we will give improved

bounds for several specific biparite graphs.

K˝ovári, Sós and Turán gave an upper bound on K

s,t

:

Theorem 2.17 (K˝ovári–Sós–Turán). For every integers 1 ≤ s ≤ t, there K˝ovári, Sós, and Turán (1954)

exists some constant C, such that

ex(n, K

s,t

) ≤ Cn

2−

1

s

.

There is an easy way to remember

the name of this theorem: “KST”, the

initials of the authors, is also the letters

for the complete bipartite graph K

s,t

.

Proof. Let G be a K

s,t

-free n-vertex graph with m edges.

First, we repeatedly remove all vertices v ∈ V(G) where d(v) <

s −1. Since we only remove at most (s −2)n edges this way, it suffices

to prove the theorem assuming that all vertices have degree at least

s −1.

We denote the number of copies of K

s,1

in G as #K

s,1

. The proof

establishes an upper bound and a lower bound on #K

s,1

, and then

gets a bound on m by combining the upper bound and the lower

bound.

Since K

s,1

is a complete bipartite graph, we can call the side with s

vertices the ‘left side‘, and the side with 1 vertices the ‘right side‘.

On the one hand, we can count #K

s,1

by enumerating the ‘left side‘.

For any subset of s vertices, the number of K

s,1

where these s vertices

form the ‘left side‘ is exactly the number of common neighbors of

these s vertices. Since G is K

s,t

-free, the number of common neigh-

bors of any subset of s vertices is at most t − 1. Thus, we establish

that #K

s,1

≤

(

n

s

)

(t −1).

On the other hand, for each vertex v ∈ V(G), the number of copies

of K

s,1

where v is the ‘right side‘ is exactly

(

d(v)

s

)

. Therefore, Here we regard

(

x

s

)

as a degree s poly-

nomial in x, so it makes sense for x to

be non-integers. The function

(

x

s

)

is

convex when x ≥ s − 1.

#K

s,1

=

∑

v∈V(G)

d(v)

s

≥ n

1

n

∑

v∈V(G)

d(v)

s

= n

2m/n

s

,

where the inequality step uses the convextiy of x 7→

(

x

s

)

.

Combining the upper bound and lower bound of #K

s,1

, we obtain

that n

(

2m/n

s

)

≤

(

n

s

)

(t − 1). For constant s, we can use

(

x

s

)

= (1 +

o(1))

n

s

s!

to get n

2m

n

s

≤ (1 + o(1))n

s

(t − 1). The above inequality

simplifies to

m ≤

1

2

+ o(1)

(t −1)

1/s

n

2−

1

s

.

30 k

˝

ovári–sós–turán theorem: forbidding a complete bipartite graph

Let us discuss a geometric application of Theorem 2.17.

Question 2.18 (Unit distance problem). What is the maximum num- Erd˝os (1946)

ber of unit distances formed by n points in R

2

?

For small values of n, we precisely know the answer to the unit

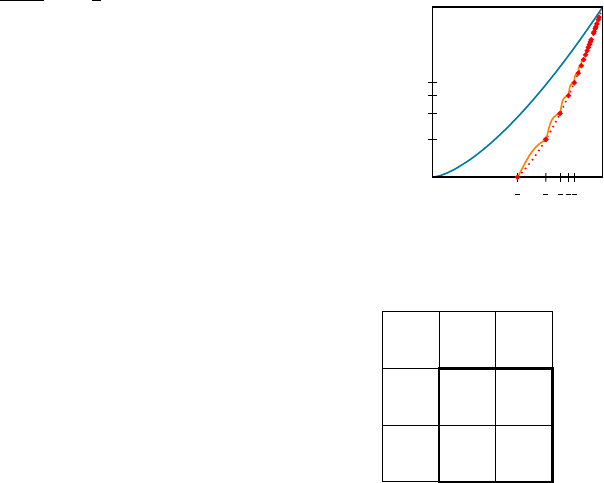

distance problem. The best configurations are listed in Figure 2.1.

n = 3 n = 4 n = 5

n = 6 n = 7

Figure 2.1: The configurations of points

for small values of n with maximum

number of unit distances. The edges

between vertices mean that the distance

is 1. These constructions are unique up

to isomorphism except when n = 6.

It is possible to generalize some of these constructions to arbitrary

n.

• A line graph has (n −1) unit distances.

···

• A chain of triangles has (2n −3) unit distances for n ≥ 3.

···

P

P

0

1

• There is also a recursive construction. Given a configuration P

with n/2 points that have f (n/2) unit distances, we can copy P

and translate it by an arbitrary unit vector to get P

0

. The configu-

ration P ∪ P

0

have at least 2 f (n/2) + n/2 unit distances. We can

solve the recursion to get f (n) = Ω(n log n).

The current best lower bound on the maximum number of unit dis-

tances is given by Erd˝os.

Proposition 2.19. There exists a set of n points in R

2

that have at least Erd˝os (1946)

n

1+c/ log log n

unit distances for some constant c.

Figure 2.2: An example grid graph

where n = 25 and r = 10.

Proof sketch. Consider a square grid with b

√

nc ×b

√

nc vertices. We

can scale the graph arbitrarily so that

√

r becomes the unit distance

for some integer r. We can pick r so that r can be represented as a

sum of two squares in many different ways. One candidate of such

r is a product of many primes that are congruent to 1 module 4. We

can use some number-theoretical theorems to analyze the best r, and

get the n

1+c/ log log n

bound.

Theorem 2.17 can be used to prove an upper bound on the number

of unit distances.

Theorem 2.20. Every set of n points in R

2

has at most O(n

3/2

) unit

distances.

Proof. Given any set of points S ⊂ R

2

, we can create the unit distance

graph G as follows:

• The vertex set of G is S,

• For any point p, q where d(p, q) = 1, we add an edge between p

and q.

forbidding subgraphs 31

p q

r = 1

Figure 2.3: Two vertices p, q can have at

most two common neighbors in the unit

distance graph.

The graph G is K

2,3

-free since for every pair of points p, q, there are at

most 2 points that have unit distances to both of them. By applying

Theorem 2.17, we obtain that e(G) = O(n

3/2

).

Remark 2.21. The best known upper bound on the number of unit Spencer, Szemerédi and Trotter (1984)

distances is O(n

4/3

). The proof is a nice application of the crossing

number inequality which will be introduced later in this book.

Here is another problem that is strongly related to the unit dis-

tance problem:

Question 2.22 (Distinct distance problem). What is the minimum

number of distinct distances formed by n points in R

2

?

Example 2.23. Consider n points on the x-axis where the i-th point

has coordinate (i, 0). The number of distinct distances for these

points is n −1.

The current best construction for minimum number of distinct

distances is also the grid graph. Consider a square grid with b

√

nc ×

b

√

nc vertices. Possible distances between two vertices are numbers

that can be expressed as a sum of the squares of two numbers that

are at most b

√

nc. Using number-theoretical methods, we can obtain

that the number of such distances: Θ(n/

p

log n).

The maximum number of unit distances is also the maximum

number that each distance can occur. Therefore, we have the follow-

ing relationship between distinct distances and unit distances:

#distinct distances ≥

(

n

2

)

max #unit distances

.

If we apply Theorem 2.20 to the above inequality, we immediately get

an Ω (n

0.5

) lower bound for the number of distinct distances. Many

mathematicians successively improved the exponent in this lower

bound over the span of seven decades. Recently, Guth and Katz gave

the following celebrated theorem, which almost matches the upper

bound (only off by an O(

p

log n)) factor).

Theorem 2.24 (Guth–Katz). Every set of n points in R

2

has at least Guth and Katz (2015)

cn/ log n distinct distances for some constant c.

The proof of Theorem 2.24 is quite sophisticated: it uses tools

ranging from polynomial method to algebraic geometry. We won’t

cover it in this book.

2.6 Lower bounds: randomized constructions

It is conjectured that the bound proven in Theorem 2.17 is tight. In

other words, ex(n, K

s,t

) = Θ(n

2−1/s

). Although this still remains

32 lower bounds: randomized constructions

open for arbitrary K

s,t

, it is already proven for a few small cases,

and in cases where t is way larger than s. In this and the next two

sections, we will show techniques for constructing H-free graphs.

Here are the three main types of constructions that we will cover:

• Randomized construction. This method is powerful and general,

but introducing randomness means that the constructions are

usually not tight.

• Algebraic construction. This method uses tools in number theory

or algebra to assist construction. It gives tighter results, but they

are usually ‘magical’, and only works in a small set of cases.

• Randomized algebraic construction. This method is the hybrid of

the two methods above and combines the advantages of both.

This section will focus on randomized constructions. We start with a

general lower bound for extremal numbers.

Theorem 2.25. For any graph H with at least 2 edges, there exists a con-

stant c > 0, such that for any n ∈ N, there exists an H-free graph on n

vertices with at least cn

2−

v(H)−2

e(H)−1

edges. In other words,

ex(n, H) ≥ cn

2−

v(H)−2

e(H)−1

.

Proof. The idea is to use the alteration method: we can construct a

graph that has few copies of H in it, and delete one edge from each

copy to eliminate the occurrences of H. The random graph G(n, p) is called

the Erd˝os–Rényi random graph, which

appears in many randomized construc-

tions.

Consider G = G(n, p) as a random graph with n vertices where

each edge appears with probability p (p to be determined). Let #H be

the number of copies of H in G. Then,

E[#H] =

n(n −1) ···(n −v(H) + 1)

|Aut(H)|

p

e(H)

≤ p

e(H)

n

v(H)

,

where Aut(H) is the automorphism group of graph H, and

E[e(G)] = p

n

2

.

Let p =

1

2

n

−

v(H)−2

e(H)−1

, chosen so that

E[#H] ≤

1

2

E[e(G)],

which further implies

E[e(G) −#H] ≥

1

2

p

n

2

≥

1

16

n

2−

v(H)−2

e(H)−1

.

Thus, there exists a graph G, such that the value of (e(G) − #H) is

at least the expectation. Remove one edge from each copy of H in G,

and we get an H-free graph with enough edges.

forbidding subgraphs 33

Remark 2.26. For example, if H is the following graph

then applying Theorem 2.25 directly gives

ex(n, H) & n

11/7

.

However, if we forbid H’s subgraph K

4

instead (forbidding a sub-

graph will automatically forbid the original graph), Theorem 2.25

actually gives us a better bound:

ex(n, H) ≥ ex(n, K

4

) & n

8/5

.

For a general H, we apply Theorem 2.25 to the subgraph of H with

the maximum (e − 1)/(v − 2) value. For this purpose, define the

2-density of H as

m

2

(H) := max

H

0

⊆H

v(H

0

)≥3

e(H

0

) −1

v(H

0

) −2

.

We have the following corollary.

Corollary 2.27. For any graph H with at least two edges, there exists

constant c = c

H

> 0 such that

ex(n, H) ≥ cn

2−1/m

2

(H)

.

Example 2.28. We present some specific examples of Theorem 2.25.

This lower bound, combined with the upper bound from the K˝ovári–

Sós–Turán theorem (Theorem 2.17), gives that for every 2 ≤ s ≤ t,

n

2−

s+t−2

st−1

. ex(n, K

s,t

) . n

2−1/s

.

When t is large compared to s, the exponents in the two bounds

above are close to each other (but never equal).

When t = s, the above bounds specialize to

n

2−

2

s+1

. n

2−

s+t−2

st−1

.. n

2−1/s

.

In particular, for s = 2, we obtain

n

4/3

. ex(n, K

2,2

) . n

3/2

.

It turns out what the upper bound is close to tight, as we show next a

different, algebraic, construction of a K

2,2

-free graph.

34 lower bounds: algebraic constructions

2.7 Lower bounds: algebraic constructions

In this section, we use algebraic constructions to find K

s,t

-free graphs,

for various values of (s, t), that match the upper bound in the K˝ovári–

Sós–Turán theorem (Theorem 2.17) up to a constant factor.

The simplest example of such an algebraic construction is the

following construction of K

2,2

-free graphs with many edges.

Theorem 2.29 (Erd˝os–Rényi–Sós). Erd˝os, Rényi and Sós (1966)

ex(n, K

2,2

) ≥

1

2

−o(1)

n

3/2

.

Proof. Suppose n = p

2

−1 where p is a prime. Consider the following

graph G (called polarity graph): Why is it called a polarity graph? It

may be helpful to first think about

the partite version of the construction,

where one vertex set is the set of points

of of a (projective) plane over F

p

, and

the other vertex set is the set of lines in

the same plane, and one has an edge

between point p and line ` if p ∈ `.

This graph is C

4

-free since no two lines

intersect in two distinct points.

The construction in the proof of

Theorem 2.29 has one vertex set that

identifies points with lines. This duality

pairing between points and lines

is known in projective geometry a

polarity.

• V(G) = F

2

p

\{(0, 0)},

• E(G) = {(x, y) ∼ (a, b)|ax + by = 1 in F

p

}.

For any two distinct vertices (a, b) 6= (a

0

, b

0

) ∈ V(G), there is at

most one solution (common neighbour) (x, y) ∈ V(G) satisfying both

ax + by = 1 and a

0

x + b

0

y = 1. Therefore, G is K

2,2

-free.

Most vertices have degree p because

the equation ax + by = 1 has exactly p

solutions (x, y). Sometimes we have to

subtract 1 because one of the solutions

might be (a, b) itself, which forms a

self-loop.

Moreover, every vertex has degree p or p −1, so the total number

of edges

e(G) =

1

2

−o(1)

p

3

=

1

2

−o(1)

n

3/2

,

which concludes our proof.

If n does not have the form p

2

− 1 for some prime, then we let p

be the largest prime such that p

2

− 1 ≤ n. Then p = (1 − o(1)n

and constructing the same graph G

p

2

−1

with n − p

2

+ 1 isolated

Here we use that the smallest prime

greater than n has size n + o(n) . The

best result of this form says that there

exists a prime in the interval [n −

n

0.525

, n] for every sufficiently large n.

Baker, Harman and Pintz (2001)

vertices.

A natural question to ask here is whether the construction above

can be generalized. The next construction gives us a construction for

K

3,3

-free graphs.

Theorem 2.30 (Brown). Brown (1966)

It is known that the constant 1/2 in

Theorem 2.30 is the best constant

possible.

ex(n, K

3,3

) ≥

1

2

−o(1)

n

5/3

Proof sketch. Let n = p

3

where p is a prime. Consider the following

graph G:

• V(G) = F

3

p

• E(G) = {(x, y, z) ∼ (a, b, c)|(a − x)

2

+ (b − y)

2

+ (c − z)

2

=

u in F

p

}, where u is some carefully-chosen fixed nonzero element

in F

p

forbidding subgraphs 35

One needs to check that it is possible to choose u so that the above

graph is K

3,3

. We omit the proof but give some intuition. Had we

used points in R

3

instead of F

3

p

, the K

3,3

-freeness is equivalent to the

statement that three unit spheres have at most two common points.

This statement about unit spheres in R

3

, and it can be proved rigor-

ously by some algebraic manipulation. One would carry out a similar

algebraic manipulation over F

p

to verify that the graph above is K

3,3

-

free.

Moreover, each vertex has degree around p

2

since the distribution

of (a −x)

2

+ (b −y)

2

+ (c −z)

2

is almost uniform across F

p

as (x, y, z)

varies randomly over F

3

p

, and so we expect roughly a 1/p fraction of

(x, y, z) to have (a −x)

2

+ (b −y)

2

+ (c −z)

2

= u. Again we omit the

details.

Although the case of K

2,2

and K

3,3

are fully solved, the correspond-

ing problem for K

4,4

is a central open problem in extremal graph

theory.

Open problem 2.31. What is the order of growth of ex(n, K

4,4

)? Is it

Θ(n

7/4

), matching the upper bound in Theorem 2.17?

9/18: Michael Ma

We have obtained the K˝ovári–Sós–Turán bound up to a constant

factor for K

2,2

and K

3,3

. Now we present a construction that matches

the K˝ovári–Sós–Turán bound for K

s,t

whenever t is sufficiently large

compared to s.

Theorem 2.32 (Alon, Kollár, Rónyai, Szabó). If t ≥ (s −1)! + 1 then Kollár, Rónyai, and Szabó (1996)

Alon, Rónyai, and Szabó (1999)

ex(n, K

s,t

) = Θ(n

2−

1

s

).

We begin by proving a weaker version for t ≥ s! + 1. This will be

similar in spirit and later we will make an adjustment to achieve the

desired bound. Take a prime p and n = p

s

with s ≥ 2. Consider the Notice that we said the image of N

lies in F

p

rather than F

p

s

. We can

easily check this is indeed the case as

N(x)

p

= N(x) .

norm map N : F

p

s

→ F

p

defined by

N(x) = x ·x

p

· x

p

2

···x

p

s−1

= x

p

s

−1

p−1

.

Define the graph NormGraph

p,s

= (V, E) with

V = F

p

s

and E = {{a, b}|a 6= b, N(a + b) = 1}.

Proposition 2.33. In NormGraph

p,s

defined as above, letting n = p

s

be the

number of vertices,

|E| ≥

1

2

n

2−

1

s

.

Proof. Since F

×

p

s

is a cyclic group of order p

s

−1 we know that

|{x ∈ F

p

s

|N(x) = 1}| =

p

s

−1

p −1

.

36 lower bounds: algebraic constructions

Thus for every vertex x (the minus one accounts for vertices with

N(x + x) = 1)

deg(x) ≥

p

s

−1

p −1

−1 ≥ p

s−1

= n

1−

1

s

.

This gives us the desired lower bound on the number of edges.

Proposition 2.34. NormGraph

p,s

is K

s,s!+1

-free.

We wish to upper bound the number of common neighbors to a

set of s vertices. We quote without proof the following result, which

can be proved using algebraic geometry.

Theorem 2.35. Let F be any field and a

ij

, b

i

∈ F such that a

ij

6= a

i

0

j

for all Kollár, Rónyai, and Szabó (1996)

i 6= i

0

. Then the system of equations

(x

1

− a

11

)(x

2

− a

12

) ···(x

s

− a

1s

) = b

1

(x

1

− a

21

)(x

2

− a

22

) ···(x

s

− a

2s

) = b

2

.

.

.

(x

1

− a

s1

)(x

2

− a

s2

) ···(x

s

− a

ss

) = b

s

has at most s! solutions in F

s

.

Remark 2.36. Consider the special case when all the b

i

are 0. In this

case, since the a

ij

are distinct for a fixed j, we are picking an i

j

for

which x

j

= a

i

j

j

. Since all the i

j

are distinct, this is equivalent to

picking a permutation on [s]. Therefore there are exactly s! solutions.

We can now prove Proposition 2.34.

Proof of Proposition 2.34. Consider distinct y

1

, y

2

, . . . , y

s

∈ F

p

s

. We

wish to bound the number of common neighbors x. We can use the

fact that in a field with characteristic p we have (x + y)

p

= x

p

+ y

p

to

obtain

1 = N(x + y

i

) = (x + y

i

)(x + y

i

)

p

. . . (x + y

i

)

p

s−1

= (x + y

i

)(x

p

+ y

p

i

) . . . (x

p

s−1

+ y

p

s−1

i

)

for all 1 ≤ i ≤ s. By Theorem 2.35 these s equations have at most

s! solutions in x. Notice we do in fact satisfy the hypothesis since

y

p

i

= y

p

j

if and only if y

i

= y

j

in our field.

Now we introduce the adjustment to achieve the bound t ≥ (s −

1)! + 1 in Theorem 2.32. We define the graph ProjNormGraph

p,s

=

(V, E) with V = F

p

s−1

×F

×

p

for s ≥ 3. Here n = (p −1)p

s−1

. Define

the edge relation as (X, x) ∼ (Y, y) if and only if

N(X + Y) = xy.

forbidding subgraphs 37

Proposition 2.37. In ProjNormGraph

p,s

defined as above, letting n =

(p −1)p

s−1

denote the number of vertices,

|E| =

1

2

−o(1)

n

2−

1

s

.

Proof. It follows from that every vertex (X, x) has degree p

s−1

−1 =

(1 −o(1))n

1−1/s

since its neighbors are (Y, N(X + Y)/x) as Y ranges

over elements of F

p

s−1

over than −X.

Now that we know we have a sufficient amount of edges we just

need our graph to be K

s,(s−1)!+1

-free.

Proposition 2.38. ProjNormGraph

p,s

is K

s,(s−1)!+1

-free.

Proof. Once again we fix distinct (Y

i

, y

i

) ∈ V for 1 ≤ i ≤ s and we

wish to find all common neighbors (X, x). Then

N(X + Y

i

) = xy

i

.

Assume this system has at least one solution. Then if Y

i

= Y

j

with i 6=

j we must have that y

i

= y

j

. Therefore all the Y

i

are distinct. For each

i < s we can take N(X + Y

i

) = xy

i

and divide by N(X + Y

s

) = xy

s

to

obtain

N

X + Y

i

X + Y

s

=

y

i

y

s

.

Dividing both sides by N(Y

i

−Y

s

) we obtain

N

1

X + Y

s

+

1

Y

i

−Y

s

=

y

i

N(Y

i

−Y

s

)y

s

for all 1 ≤ i ≤ s − 1. Now applying Theorem 2.35 there are at most

(s − 1)! choices for X, which also determines x = N(X + Y

1

)/y

1

.

Thus there are at most (s −1)! common neighbors.

Now we are ready to prove Theorem 2.32.

Proof of Theorem 2.32. By Proposition 2.37 and Proposition 2.38 we

know that ProjNormGraph

p,s

is K

s,(s−1)!+1

-free and therefore K

s,t

-free

and has

1

2

−o(1)

n

2−

1

s

edges as desired.

2.8 Lower bounds: randomized algebraic constructions

So far we have seen both constructions using random graphs and

algebraic constructions. In this section we present an alternative

construction of K

s,t

-free graphs due to Bukh with Θ(n

2−

1

s

) edges pro- Bukh (2015)

vided t > t

0

(s) for some function t

0

. This is an algebraic construction

with some randomness added to it.

38 lower bounds: randomized algebraic constructions

First fix s ≥ 4 and take a prime power q. Let d = s

2

− s + 2 and

f ∈ F

q

[x

1

, x

2

, . . . , x

s

, y

1

, y

2

, . . . , y

s

] be a polynomial chosen uniformly

at random among all polynomials with degree at most d in each

of X = (x

1

, x

2

, . . . , x

s

) and Y = (y

1

, y

2

, . . . , y

s

). Take G bipartite

with vertex parts n = L = R = F

s

q

and define the edge relation as

(X, Y) ∈ L ×R when f (X, Y) = 0.

Lemma 2.39. For all u, v ∈ F

s

q

and f chosen randomly as above

P[ f (u, v) = 0] =

1

q

.

Proof. Notice that if g is a uniformly random constant in F

q

, then

f (u, v) and f (u, v) + g are identically distributed. Hence each of the q

possibilities are equally likely to the probability is 1/q.

Now the expected number of edges is the order we want as

E[e(G)] =

n

2

q

. All that we need is for the number of copies of K

s,t

to be relatively low. In order to do so, we must answer the follow-

ing question. For a set of vertices in L of size s, how many common

neighbors can it have?

Lemma 2.40. Suppose r, s ≤ min(

√

q, d) and U, V ⊂ F

s

q

with |U| = s

and |V| = r. Furthermore let f ∈ F

q

[x

1

, x

2

, . . . , x

s

, y

1

, y

2

, . . . , y

s

] be a

polynomial chosen uniformly at random among all polynomials with degree

at most d in each of X = (x

1

, x

2

, . . . , x

s

) and Y = (y

1

, y

2

, . . . , y

s

). Then

P[ f (u, v) = 0 for all u ∈ U, v ∈ V] = q

−sr

.

Proof. First let us consider the special case where the first coordinates

of points in U and V are all distinct. Define

g(X

1

, Y

1

) =

∑

0≤i≤s−1

0≤j≤r−1

a

ij

X

i

1

Y

j

1

with a

ij

each uniform iid random variables over F

q

. We know that

f and f + g have the same distribution, so it suffices to show for

all b

uv

∈ F

q

where u ∈ U and v ∈ V there exists a

ij

for which

g(u, v) = b

uv

for all u ∈ U, v ∈ V. The idea is to apply Lagrange

Interpolation twice. First for all u ∈ U we can find a single variable

polynomial g

u

(Y

1

) with degree at most r − 1 such that g

u

(v) = b

uv

for all v ∈ V. Then we can view g(X

1

, Y

1

) as a polynomial in Y

1

with

coefficients being polynomials in X

1

, i.e.,

g(X