FFIRS 08/25/2011 11:31:15 Page 2


THE ART OF SOFTWARE TESTING


Third Edition

GLENFORD J. MYERS

TOM BADGETT

COREY SANDLER

John Wiley & Sons, Inc.


Copyright © 2012 by Word Association, Inc. All rights reserved.

Published by John Wiley & Sons, Inc., Hoboken, New Jersey.

Published simultaneously in Canada.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

For general information on our other products and services or for technical support, please contact our Customer Care Department within the United States at (800) 762-2974, outside the United States at (317) 572-3993 or fax (317) 572-4002.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic books. For more information about Wiley products, visit our website at www.wiley.com.

Library of Congress Cataloging-in-Publication Data:

Myers, Glenford J., 1946-

The art of software testing / Glenford J. Myers, Corey Sandler, Tom Badgett. — 3rd ed.

p. cm.

Includes index.

ISBN 978-1-118-03196-4 (cloth); ISBN 978-1-118-13313-2 (ebk); ISBN 978-1-118-13314-9 (ebk); ISBN 978-1-118-13315-6 (ebk)

1. Computer software—Testing. 2. Debugging in computer science. I. Sandler, Corey, 1950- II. Badgett, Tom. III. Title.

QA76.76.T48M894 2011

005.1'4—dc23

2011017548

Printed in the United States of America

10 9 8 7 6 5 4 3 2 1


Contents

Preface
Introduction
1 A Self-Assessment Test
2 The Psychology and Economics of Software Testing
3 Program Inspections, Walkthroughs, and Reviews
4 Test-Case Design
5 Module (Unit) Testing
6 Higher-Order Testing
7 Usability (User) Testing
8 Debugging
9 Testing in the Agile Environment
10 Testing Internet Applications
11 Mobile Application Testing
Appendix: Sample Extreme Testing Application
Index

Preface

In 1979, Glenford Myers published a book that turned out to be a classic. The Art of Software Testing has stood the test of time—25 years on the publisher’s list of available books. This fact alone is a testament to the solid, essential, and valuable nature of his work.

During that same time, the authors of this edition (the third) of The Art of Software Testing published, collectively, more than 200 books, most of them on computer software topics. Some of these titles sold very well and, like this one, have gone through multiple versions. Corey Sandler’s Fix Your Own PC, for example, is in its eighth edition as this book goes to press; and Tom Badgett’s books on Microsoft PowerPoint and other Office titles have gone through four or more editions. However, unlike Myers’s book, none of these remained current for more than a few years.

What is the difference? The newer books covered more transient topics—operating systems, applications software, security, communications technology, and hardware configurations. Rapid changes in computer hardware and software technology during the 1980s and 1990s necessitated frequent changes and updates to these topics.

Also during that period, hundreds of books about software testing were published. They, too, took a more transient approach to the topic. The Art of Software Testing alone gave the industry a long-lasting, foundational guide to one of the most important computer topics: How do you ensure that all of the software you produce does what it was designed to do, and—just as important—doesn’t do what it isn’t supposed to do?

The edition you are reading today retains the foundational philosophy laid by Myers more than three decades ago. But we have updated the examples to include more current programming languages, and we have addressed topics that were not yet topics when Myers wrote the first edition: Web programming, e-commerce, Extreme (Agile) programming and testing, and testing applications for mobile devices.

Along the way, we never lost sight of the fact that a new classic must stay true to its roots, so our version also offers you a software testing philosophy and a process that work across current and unforeseeable future hardware and software platforms. We hope that the third edition of The Art of Software Testing, too, will span a generation of software designers and developers.

Introduction

At the time this book was first published, in 1979, it was a well-known rule of thumb that in a typical programming project approximately 50 percent of the elapsed time and more than 50 percent of the total cost were expended in testing the program or system being developed.

Today, a third of a century and two book updates later, the same holds true. There are new development systems, languages with built-in tools, and programmers who are used to developing more on the fly. But testing continues to play an important part in any software development project.

Given these facts, you might expect that by this time program testing would have been refined into an exact science. This is far from the case. In fact, less seems to be known about software testing than about any other aspect of software development. Furthermore, testing has been an out-of-vogue subject; it was so when this book was first published and, unfortunately, this has not changed. Today there are more books and articles about software testing—meaning that, at least, the topic has greater visibility than it did when this book was first published—but testing remains among the “dark arts” of software development.

This would be more than enough reason to update this book on the art of software testing, but we have additional motivations. At various times, we have heard professors and teaching assistants say, “Our students graduate and move into industry without any substantial knowledge of how to go about testing a program. Moreover, we rarely have any advice to offer in our introductory courses on how a student should go about testing and debugging his or her exercises.”

Thus, the purpose of this updated edition of The Art of Software Testing is the same as it was in 1979 and in 2004: to fill these knowledge gaps for the professional programmer and the student of computer science. As the title implies, the book is a practical, rather than theoretical, discussion of the subject, complete with updated language and process discussions.

Although it is possible to discuss program testing in a theoretical vein, this book is intended to be a practical, “both feet on the ground” handbook. Hence, many subjects related to program testing, such as the idea of mathematically proving the correctness of a program, were purposefully excluded.

Chapter 1 “assigns” a short self-assessment test that every reader should take before reading further. It turns out that the most important practical information you must understand about program testing is a set of philosophical and economic issues; these are discussed in Chapter 2. Chapter 3 introduces the important concept of noncomputer-based code walkthroughs, or inspections. Rather than focus attention on the procedural or managerial aspects of this concept, as most such discussions do, this chapter addresses it from a technical, how-to-find-errors point of view.

The alert reader will realize that the most important component in a program tester’s bag of tricks is the knowledge of how to write effective test cases; this is the subject of Chapter 4. Chapter 5 discusses the testing of individual modules or subroutines, followed in Chapter 6 by the testing of larger entities. Chapter 7 takes on the concept of user or usability testing, a component of software testing that always has been important, but is even more relevant today due to the advent of more complex software targeted at an ever-broadening audience. Chapter 8 offers some practical advice on program debugging, while Chapter 9 delves into the concepts of extreme programming testing with emphasis on what has come to be called the “agile environment.” Chapter 10 shows how to apply the program-testing techniques detailed elsewhere in this book to Web programming, including e-commerce systems and the all-new, highly interactive social networking sites. Chapter 11 describes how to test software developed for the mobile environment.

We direct this book at three major audiences. The first is the professional programmer. Although we hope that not everything in this book will be new information to this audience, we believe it will add to the professional’s knowledge of testing techniques. If the material allows this group to detect just one more bug in one program, the price of the book will have been recovered many times over.

The second audience is the project manager, who will benefit from the book’s practical information on the management of the testing process.

The third audience is the programming and computer science student, and our goal for them is twofold: to expose them to the problems of program testing, and to provide a set of effective techniques. For this third group, we suggest the book be used as a supplement in programming courses so that students are exposed to the subject of software testing early in their education.

1 A Self-Assessment Test

Since this book was first published over 30 years ago, software testing has become both more difficult and easier than ever.

Software testing is more difficult because of the vast array of programming languages, operating systems, and hardware platforms that have evolved in the intervening decades. And while relatively few people used computers in the 1970s, today virtually no one can complete a day’s work without using a computer. Not only do computers exist on your desk, but a “computer,” and consequently software, is present in almost every device we use. Just try to think of the devices today that society relies on that are not software-driven. Sure, there are some—hammers and wheelbarrows come to mind—but the vast majority use some form of software to operate.

Software is pervasive, which raises the value of testing it. The machines themselves are hundreds of times more powerful, and smaller, than those early devices, and today’s concept of “computer” is much broader and more difficult to define. Televisions, telephones, gaming systems, and automobiles all contain computers and computer software, and in some cases can even be considered computers themselves.

Therefore, the software we write today potentially touches millions of people, either enabling them to do their jobs effectively and efficiently, or causing them untold frustration and costing them in the form of lost work or lost business. This is not to say that software is more important today than it was when the first edition of this book was published, but it is safe to say that computers—and the software that drives them—certainly affect more people and more businesses now than ever before.

Software testing is easier, too, in some ways, because the array of software and operating systems is much more sophisticated than in the past, providing intrinsic, well-tested routines that can be incorporated into applications without the need for a programmer to develop them from scratch. Graphical User Interfaces (GUIs), for example, can be built from a development language’s libraries, and since they are preprogrammed objects that have been debugged and tested previously, the need for testing them as part of a custom application is much reduced.

And, despite the plethora of software testing tomes available on the market today, many developers seem to have an attitude that is counter to extensive testing. Better development tools, pretested GUIs, and the pressure of tight deadlines in an ever-more-complex development environment can lead to avoidance of all but the most obvious testing protocols. Whereas minor bugs may only inconvenience the end user, the worst can result in large financial losses or even cause harm to people. The procedures in this book can help designers, developers, and project managers better understand the value of comprehensive testing, and provide guidelines to help them achieve required testing goals.

Software testing is a process, or a series of processes, designed to make sure computer code does what it was designed to do and, conversely, that it does not do anything unintended. Software should be predictable and consistent, presenting no surprises to users. In this book, we will look at many approaches to achieving this goal.

Now, before we start the book, we’d like you to take a short exam. We want you to write a set of test cases—specific sets of data—to properly test a relatively simple program. Create a set of test data for the program—data the program must handle correctly to be considered a successful program. Here’s a description of the program:

The program reads three integer values from an input dialog. The three values represent the lengths of the sides of a triangle. The program displays a message that states whether the triangle is scalene, isosceles, or equilateral.

Remember that a scalene triangle is one where no two sides are equal, whereas an isosceles triangle has two equal sides, and an equilateral triangle has three sides of equal length. Moreover, the angles opposite the equal sides in an isosceles triangle also are equal (it also follows that the sides opposite equal angles in a triangle are equal), and all angles in an equilateral triangle are equal.

Evaluate your set of test cases by using it to answer the following questions. Give yourself one point for each yes answer.

1. Do you have a test case that represents a valid scalene triangle? (Note that test cases such as 1, 2, 3 and 2, 5, 10 do not warrant a yes answer because a triangle having these dimensions is not valid.)
2. Do you have a test case that represents a valid equilateral triangle?
3. Do you have a test case that represents a valid isosceles triangle? (Note that a test case representing 2, 2, 4 would not count because it is not a valid triangle.)
4. Do you have at least three test cases that represent valid isosceles triangles such that you have tried all three permutations of two equal sides (such as 3, 3, 4; 3, 4, 3; and 4, 3, 3)?
5. Do you have a test case in which one side has a zero value?
6. Do you have a test case in which one side has a negative value?
7. Do you have a test case with three integers greater than zero such that the sum of two of the numbers is equal to the third? (That is, if the program said that 1, 2, 3 represents a scalene triangle, it would contain a bug.)
8. Do you have at least three test cases in category 7 such that you have tried all three permutations where the length of one side is equal to the sum of the lengths of the other two sides (e.g., 1, 2, 3; 1, 3, 2; and 3, 1, 2)?
9. Do you have a test case with three integers greater than zero such that the sum of two of the numbers is less than the third (such as 1, 2, 4 or 12, 15, 30)?
10. Do you have at least three test cases in category 9 such that you have tried all three permutations (e.g., 1, 2, 4; 1, 4, 2; and 4, 1, 2)?
11. Do you have a test case in which all sides are zero (0, 0, 0)?
12. Do you have at least one test case specifying noninteger values (such as 2.5, 3.5, 5.5)?
13. Do you have at least one test case specifying the wrong number of values (two rather than three integers, for example)?
14. For each test case, did you specify the expected output from the program in addition to the input values?

Of course, a set of test cases that satisfies these conditions does not guarantee that you will find all possible errors, but since questions 1 through 13 represent errors that actually have occurred in different versions of this program, an adequate test of this program should expose at least these errors.
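The questions above amount to a table of inputs paired with expected outputs. The Python sketch below encodes that table against a minimal triangle classifier. It is an illustration under stated assumptions, not the program the questions were written against: the comma-separated input format, the function name classify_triangle, and the result strings "not a triangle" and "invalid input" are all hypothetical, because the specification says nothing about how bad input should be reported.

```python
# A minimal sketch of the triangle program plus a table of test cases keyed
# to the self-assessment questions. Input format, function name, and result
# strings are hypothetical choices made for this illustration.

def classify_triangle(raw: str) -> str:
    """Classify a comma-separated line of three side lengths."""
    parts = [p.strip() for p in raw.split(",")]
    if len(parts) != 3:                      # Q13: wrong number of values
        return "invalid input"
    try:
        a, b, c = (int(p) for p in parts)    # Q12: rejects 2.5, A, and so on
    except ValueError:
        return "invalid input"
    if min(a, b, c) <= 0:                    # Q5, Q6, Q11: zero or negative
        return "not a triangle"
    x, y, z = sorted((a, b, c))
    if x + y <= z:                           # Q7 to Q10: sum too small
        return "not a triangle"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


# Every case pairs an input with its expected output (Q14).
TEST_CASES = [
    ("3, 4, 5", "scalene"),               # Q1
    ("3, 3, 3", "equilateral"),           # Q2
    ("3, 3, 4", "isosceles"),             # Q3, Q4
    ("3, 4, 3", "isosceles"),
    ("4, 3, 3", "isosceles"),
    ("0, 4, 5", "not a triangle"),        # Q5
    ("-3, 4, 5", "not a triangle"),       # Q6
    ("1, 2, 3", "not a triangle"),        # Q7, Q8
    ("1, 3, 2", "not a triangle"),
    ("3, 1, 2", "not a triangle"),
    ("1, 2, 4", "not a triangle"),        # Q9, Q10
    ("1, 4, 2", "not a triangle"),
    ("4, 1, 2", "not a triangle"),
    ("0, 0, 0", "not a triangle"),        # Q11
    ("2.5, 3.5, 5.5", "invalid input"),   # Q12
    ("2, 3", "invalid input"),            # Q13
]

if __name__ == "__main__":
    failures = []
    for raw, expected in TEST_CASES:
        actual = classify_triangle(raw)
        if actual != expected:
            failures.append((raw, expected, actual))
    print("all cases pass" if not failures else failures)
```

Run against this sketch, the script prints `all cases pass`. Removing the `x + y <= z` check would make the 1, 2, 3 and 1, 2, 4 rows fail, which is exactly what those rows are there to catch.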

Now, before you become concerned about your score, consider this: In our experience, highly qualified professional programmers score, on the average, only 7.8 out of a possible 14. If you’ve done better, congratulations; if not, we’re here to help.

The point of the exercise is to illustrate that the testing of even a trivial program such as this is not an easy task. Given this is true, consider the difficulty of testing a 100,000-statement air traffic control system, a compiler, or even a mundane payroll program. Testing also becomes more difficult with the object-oriented languages, such as Java and C++. For example, your test cases for applications built with these languages must expose errors associated with object instantiation and memory management.

It might seem from working with this example that thoroughly testing a complex, real-world program would be impossible. Not so! Although the task can be daunting, adequate program testing is a very necessary—and achievable—part of software development, as you will learn in this book.

2 The Psychology and Economics of Software Testing

Software testing is a technical task, yes, but it also involves some important considerations of economics and human psychology.

In an ideal world, we would want to test every possible permutation of a program. In most cases, however, this simply is not possible. Even a seemingly simple program can have hundreds or thousands of possible input and output combinations. Creating test cases for all of these possibilities is impractical. Complete testing of a complex application would take too long and require too many human resources to be economically feasible.

In addition, the software tester needs the proper attitude (perhaps “vision” is a better word) to successfully test a software application. In some cases, the tester’s attitude may be more important than the actual process itself. Therefore, we will start our discussion of software testing with these issues before we delve into the more technical nature of the topic.

The Psychology of Testing

One of the primary causes of poor application testing is the fact that most programmers begin with a false definition of the term. They might say:

“Testing is the process of demonstrating that errors are not present.”
“The purpose of testing is to show that a program performs its intended functions correctly.”
“Testing is the process of establishing confidence that a program does what it is supposed to do.”

These definitions are upside down.

When you test a program, you want to add some value to it. Adding value through testing means raising the quality or reliability of the program. Raising the reliability of the program means finding and removing errors.

Therefore, don’t test a program to show that it works; rather, start with the assumption that the program contains errors (a valid assumption for almost any program) and then test the program to find as many of the errors as possible.

Thus, a more appropriate definition is this:

Testing is the process of executing a program with the intent of finding errors.

Although this may sound like a game of subtle semantics, it’s really an important distinction. Understanding the true definition of software testing can make a profound difference in the success of your efforts.

Human beings tend to be highly goal-oriented, and establishing the proper goal has an important psychological effect on them. If our goal is to demonstrate that a program has no errors, then we will be steered subconsciously toward this goal; that is, we tend to select test data that have a low probability of causing the program to fail. On the other hand, if our goal is to demonstrate that a program has errors, our test data will have a higher probability of finding errors. The latter approach will add more value to the program than the former.

This definition of testing has myriad implications, many of which are scattered throughout this book. For instance, it implies that testing is a destructive, even sadistic, process, which explains why most people find it difficult. That may go against our grain; with good fortune, most of us have a constructive, rather than a destructive, outlook on life. Most people are inclined toward making objects rather than ripping them apart. The definition also has implications for how test cases (test data) should be designed, and who should and who should not test a given program.

Another way of reinforcing the proper definition of testing is to analyze the use of the words “successful” and “unsuccessful”—in particular, their use by project managers in categorizing the results of test cases. Most project managers refer to a test case that did not find an error as a “successful test run,” whereas a test that discovers a new error is usually called “unsuccessful.”

Once again, this is upside down. “Unsuccessful” denotes something undesirable or disappointing. To our way of thinking, a well-constructed and executed software test is successful when it finds errors that can be fixed. That same test is also successful when it eventually establishes that there are no more errors to be found. The only unsuccessful test is one that does not properly examine the software; and, in the majority of cases, a test that found no errors likely would be considered unsuccessful, since the concept of a program without errors is basically unrealistic.

A test case that finds a new error can hardly be considered unsuccessful; rather, it has proven to be a valuable investment. An unsuccessful test case is one that causes a program to produce the correct result without finding any errors.

Consider the analogy of a person visiting a doctor because of an overall feeling of malaise. If the doctor runs some laboratory tests that do not locate the problem, we do not call the laboratory tests “successful”; they were unsuccessful tests in that the patient’s net worth has been reduced by the expensive laboratory fees, the patient is still ill, and the patient may question the doctor’s ability as a diagnostician. However, if a laboratory test determines that the patient has a peptic ulcer, the test is successful because the doctor can now begin the appropriate treatment. Hence, the medical profession seems to use these words in the proper sense. The analogy, of course, is that we should think of the program, as we begin testing it, as the sick patient.

A second problem with such definitions as “testing is the process of demonstrating that errors are not present” is that such a goal is impossible to achieve for virtually all programs, even trivial programs.

Again, psychological studies tell us that people perform poorly when they set out on a task that they know to be infeasible or impossible. For instance, if you were instructed to solve the crossword puzzle in the Sunday New York Times in 15 minutes, you probably would achieve little, if any, progress after 10 minutes because, if you are like most people, you would be resigned to the fact that the task seems impossible. If you were asked for a solution in four hours, however, we could reasonably expect to see more progress in the initial 10 minutes. Defining program testing as the process of uncovering errors in a program makes it a feasible task, thus overcoming this psychological problem.

A third problem with the common definitions such as “testing is the process of demonstrating that a program does what it is supposed to do” is that programs that do what they are supposed to do still can contain errors. That is, an error is clearly present if a program does not do what it is supposed to do; but errors are also present if a program does what it is not supposed to do. Consider the triangle program of Chapter 1. Even if we could demonstrate that the program correctly distinguishes among all scalene, isosceles, and equilateral triangles, the program still would be in error if it does something it is not supposed to do (such as representing 1, 2, 3 as a scalene triangle or saying that 0, 0, 0 represents an equilateral triangle). We are more likely to discover the latter class of errors if we view program testing as the process of finding errors than if we view it as the process of showing that a program does what it is supposed to do.

To summarize, program testing is more properly viewed as the destructive process of trying to find the errors in a program (whose presence is assumed). A successful test case is one that furthers progress in this direction by causing the program to fail. Of course, you eventually want to use program testing to establish some degree of confidence that a program does what it is supposed to do and does not do what it is not supposed to do, but this purpose is best achieved by a diligent exploration for errors.

Consider someone approaching you with the claim that “my program is perfect” (i.e., error free). The best way to establish some confidence in this claim is to try to refute it, that is, to try to find imperfections rather than just confirm that the program works correctly for some set of input data.

The Economics of Testing

Given our definition of program testing, an appropriate next step is to determine whether it is possible to test a program to find all of its errors. We will show you that the answer is negative, even for trivial programs. In general, it is impractical, often impossible, to find all the errors in a program. This fundamental problem will, in turn, have implications for the economics of testing, assumptions that the tester will have to make about the program, and the manner in which test cases are designed.

To combat the challenges associated with testing economics, you should establish some strategies before beginning. Two of the most prevalent strategies are black-box testing and white-box testing, which we will explore in the next two sections.

Black-Box Testing

One important testing strategy is black-box testing (also known as data-driven or input/output-driven testing). To use this method, view the program as a black box. Your goal is to be completely unconcerned about the internal behavior and structure of the program. Instead, concentrate on finding circumstances in which the program does not behave according to its specifications.

In this approach, test data are derived solely from the specifications (i.e., without taking advantage of knowledge of the internal structure of the program).

If you want to use this approach to find all errors in the program, the criterion is exhaustive input testing, making use of every possible input condition as a test case. Why? If you tried three equilateral-triangle test cases for the triangle program, that in no way guarantees the correct detection of all equilateral triangles. The program could contain a special check for values 3842, 3842, 3842 and denote such a triangle as a scalene triangle. Since the program is a black box, the only way to be sure of detecting the presence of such a statement is by trying every input condition.
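A deliberately defective sketch makes the point concrete. The Python function below is hypothetical, written only for this illustration, and it skips the validity checks a real triangle program would need. It behaves correctly except for the one special-cased input described above, so any small sample of equilateral test cases passes.

```python
# Hypothetical, deliberately defective classifier (validity checks omitted
# to keep the example short). It is wrong for exactly one input.
def classify(a: int, b: int, c: int) -> str:
    if (a, b, c) == (3842, 3842, 3842):   # hidden special case from the text
        return "scalene"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


# Three black-box equilateral test cases: all of them pass.
for sides in [(1, 1, 1), (5, 5, 5), (100, 100, 100)]:
    assert classify(*sides) == "equilateral", sides

# The defect survives because no sampled input happened to hit it.
print(classify(3842, 3842, 3842))  # prints: scalene
```

Nothing in the passing results hints at the bad branch; only an input that happens to equal 3842, 3842, 3842 exposes it.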

To test the triangle program exhaustively, you would have to create test cases for all valid triangles up to the maximum integer size of the development language. This in itself is an astronomical number of test cases, but it is in no way exhaustive: It would not find errors where the program said that −3, 4, 5 is a scalene triangle and that 2, A, 2 is an isosceles triangle.

To be sure of finding all such errors, you have to test using not only all valid inputs, but all possible inputs. Hence, to test the triangle program exhaustively, you would have to produce virtually an infinite number of test cases, which, of course, is not possible.
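A rough calculation shows how quickly even an integer-only input space outgrows any test budget. The sketch below assumes, hypothetically, that each side is a 32-bit integer and that a test harness could execute one billion test cases per second. Both figures are illustrative and do not come from the text.

```python
# Back-of-the-envelope size of exhaustive testing over one integer range.
# Hypothetical assumptions: each side is any 32-bit integer value, and a
# harness runs one billion test cases per second.
SIDE_VALUES = 2 ** 32                  # every 32-bit value a side could take
TESTS_PER_SECOND = 10 ** 9
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

total_inputs = SIDE_VALUES ** 3        # all ordered triples (a, b, c)
years = total_inputs / TESTS_PER_SECOND / SECONDS_PER_YEAR

print(f"{total_inputs:.3e} input triples, about {years:.1e} years to run")
```

Under those assumptions the count is roughly 7.9 × 10^28 triples, or about 2.5 × 10^12 years of run time. That still leaves out inputs such as 2, A, 2 that are not integers at all.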

If this sounds difficult, exhaustive input testing of larger programs is even more problematic. Consider attempting an exhaustive black-box test of a C++ compiler. Not only would you have to create test cases representing all valid C++ programs (again, virtually an infinite number), but you would have to create test cases for all invalid C++ programs (an infinite number) to ensure that the compiler detects them as being invalid. That is, the compiler has to be tested to ensure that it does not do what it is not supposed to do—for example, successfully compile a syntactically incorrect program.

The problem is even more onerous for transaction-based programs such as database applications. For example, in a database application such as an airline reservation system, the execution of a transaction (such as a database query or a reservation for a plane flight) is dependent upon what happened in previous transactions. Hence, not only would you have to try all unique valid and invalid transactions, but also all possible sequences of transactions.
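The growth in sequences is easy to quantify. In the hypothetical sketch below, the number of transaction types (20) and the maximum session length (10) are made-up figures. With k types and sequences of length 1 through n, the count is k^1 + k^2 + ... + k^n.

```python
# Hypothetical figures for a reservation system: 20 distinct transaction
# types and sessions of up to 10 transactions.
TRANSACTION_TYPES = 20
MAX_LENGTH = 10


def count_sequences(kinds: int, max_len: int) -> int:
    """Number of ordered transaction sequences of length 1..max_len."""
    return sum(kinds ** n for n in range(1, max_len + 1))


print(count_sequences(TRANSACTION_TYPES, MAX_LENGTH))
```

With these figures the total is about 1.1 × 10^13 sequences, and that is before counting the different parameters (flights, passengers, dates) each transaction can carry.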

This discussion shows that exhaustive input testing is impossible. This has two important implications: (1) You cannot test a program to guarantee that it is error free; and (2) a fundamental consideration in program testing is one of economics. Thus, since exhaustive testing is out of the question, the objective should be to maximize the yield on the testing investment by maximizing the number of errors found by a finite number of test cases. Doing so will involve, among other things, being able to peer inside the program and make certain reasonable, but not airtight, assumptions about the program (e.g., if the triangle program detects 2, 2, 2 as an equilateral triangle, it seems reasonable that it will do the same for 3, 3, 3). This will form part of the test-case design strategy in Chapter 4.

White-Box Testing

Another testing strategy, white-box (or logic-driven) testing, permits you to

examine the internal structure of the program. This strategy derives test data from an examination of the program’s logic (and often, unfortunately, to the neglect of the specification).

The goal at this point is to establish for this strategy the analog to exhaus-

tive input testing in the black-box approach. Causing every statement in the

program to execute at least once might appear to be the answer, but it is not

difficult to show that this is highly inadequate. Without belaboring the point

here, since this matter is discussed in greater depth in Chapter 4, the analog

is usually considered to be exhaustive path testing. That is, if you execute, via

test cases, all possible paths of control flow through the program, then possi-

bly the program has been completely tested.

There are two flaws in this statement, however. One is that the number

of unique logic paths through a program could be astronomically large. To

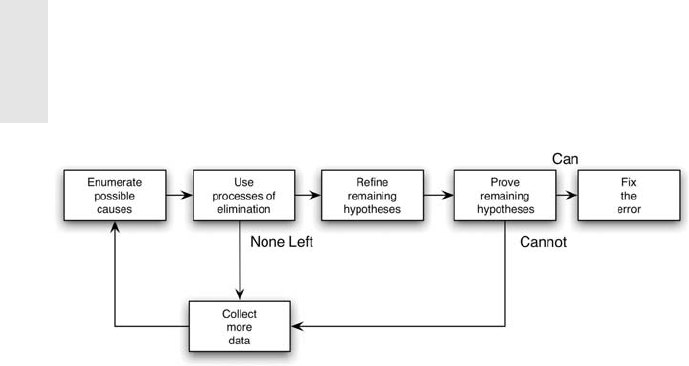

see this, consider the trivial program represented in Figure 2.1. The dia-

gram is a control-flow graph. Each node or circle represents a segment of

statements that execute sequentially, possibly terminating with a branching

statement. Each edge or arc represents a transfer of control (branch) be-

tween segments. The diagram, then, depicts a 10- to 20-statement program

consisting of a

DO loop that iterates up to 20 times. Within the body of the

DO loop is a set of nested IF statements. Determining the number of unique

logic paths is the same as determining the total number of unique ways of

moving from point a to point b (assuming that all decisions in the program

are independent from one another). This number is approximately $10^{14}$, or 100 trillion. It is computed from $5^{20} + 5^{19} + \cdots + 5^{1}$, where 5 is the

number of paths through the loop body. Most people have a difficult time

visualizing such a number, so consider it this way: If you could write, exe-

cute, and verify a test case every five minutes, it would take approximately

1 billion years to try every path. If you were 300 times faster, completing a

test once per second, you could complete the job in 3.2 million years, give

or take a few leap years and centuries.
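The arithmetic above can be checked with a short sketch. It assumes, as the text does, that every decision is independent and that each of the up to 20 loop iterations follows one of 5 paths; the class name is hypothetical. The exact sum is about 1.2 × 10^14, so it gives roughly 1.1 billion years at five minutes per test and roughly 3.8 million years at one test per second; the text's 3.2 million follows from rounding the count down to 10^14.

```java
public class PathCount {
    // Paths from a to b when a loop body with pathsPerIteration routes runs
    // between 1 and maxIterations times, assuming independent decisions:
    // pathsPerIteration^1 + pathsPerIteration^2 + ... + pathsPerIteration^maxIterations.
    static long countPaths(int pathsPerIteration, int maxIterations) {
        long total = 0;
        long term = 1;
        for (int k = 1; k <= maxIterations; k++) {
            term *= pathsPerIteration;   // term is now pathsPerIteration^k
            total += term;
        }
        return total;
    }

    public static void main(String[] args) {
        long paths = countPaths(5, 20);
        double secondsPerYear = 365.25 * 24 * 60 * 60;
        System.out.println("paths: " + paths);
        System.out.printf("one test per 5 minutes: %.2e years%n", paths * 300.0 / secondsPerYear);
        System.out.printf("one test per second:    %.2e years%n", paths / secondsPerYear);
    }
}
```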

Of course, in actual programs not every decision is independent of every other decision, meaning that the number of possible execution paths would be somewhat smaller. On the other hand, actual programs are much

larger than the simple program depicted in Figure 2.1. Hence, exhaustive

path testing, like exhaustive input testing, appears to be impractical, if not

impossible.

FIGURE 2.1 Control-Flow Graph of a Small Program.


The second flaw in the statement “exhaustive path testing means a complete test” is that every path in a program could be tested, yet the program

might still be loaded with errors. There are three explanations for this.

The first is that an exhaustive path test in no way guarantees that a pro-

gram matches its specification. For example, if you were asked to write an

ascending-order sorting routine but mistakenly produced a descending-order sorting routine, exhaustive path testing would be of little value; the

program still has one bug: It is the wrong program, as it does not meet the

specification.

Second, a program may be incorrect because of missing paths. Exhaustive

path testing, of course, would not detect the absence of necessary paths.

Third, an exhaustive path test might not uncover data-sensitivity errors.

There are many examples of such errors, but a simple one should suffice.

Suppose that in a program you have to compare two numbers for conver-

gence, that is, to see if the difference between the two numbers is less than

some predetermined value. For example, you might write a Java

IF state-

ment as

if (a-b<c)

System.out.println("a-b<c");

Of course, the statement contains an error because it should compare c

to the absolute value of a-b. Detection of this error, however, is dependent

upon the values used for

a and b and would not necessarily be detected by

just executing every path through the program.
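The following sketch makes the point concrete. It wraps the statement above in a hypothetical method `convergedBuggy` next to a corrected `converged` that uses `Math.abs`. Two test cases are enough to execute both paths of the `if` (the true branch and the false branch), yet in both the buggy version agrees with the corrected one; only data in which `a` is smaller than `b` by more than `c` exposes the error.

```java
public class Convergence {
    // The statement from the text: it ignores the sign of a - b.
    static boolean convergedBuggy(double a, double b, double c) {
        return a - b < c;
    }

    // The intended check: compare c to the absolute difference.
    static boolean converged(double a, double b, double c) {
        return Math.abs(a - b) < c;
    }

    public static void main(String[] args) {
        double c = 0.5;
        // Takes the true branch: both versions agree.
        System.out.println(convergedBuggy(1.0, 1.1, c) + " " + converged(1.0, 1.1, c));   // true true
        // Takes the false branch: both versions agree again.
        System.out.println(convergedBuggy(5.0, 1.0, c) + " " + converged(5.0, 1.0, c));   // false false
        // Same true branch as the first case, but this data exposes the error.
        System.out.println(convergedBuggy(1.0, 10.0, c) + " " + converged(1.0, 10.0, c)); // true false
    }
}
```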

In conclusion, although exhaustive input testing is superior to exhaustive path testing, neither proves to be useful because both are infeasible. Perhaps, then, there are ways of combining elements of black-box and white-box testing to derive a reasonable, but not airtight, testing strategy.

This matter is pursued further in Chapter 4.

Software Testing Principles

Continuing with the major premise of this chapter, that the most impor-

tant considerations in software testing are issues of psychology, we can

identify a set of vital testing principles or guidelines. Most of these princi-

ples may seem obvious, yet they are all too often overlooked. Table 2.1

summarizes these important principles, and each is discussed in more

detail in the paragraphs that follow.


Principle 1: A necessary part of a test case is a definition of the expected output or result.

This principle, though obvious, is often overlooked, and the oversight causes one of the most frequent mistakes in program testing. Again, it is something that is based on human psychology. If the expected result of a test case has not been predefined, chances are that a plausible, but erroneous, result will be interpreted as a correct result because of the phenomenon of “the eye seeing what it wants to see.” In other

words, in spite of the proper destructive definition of testing, there is

still a subconscious desire to see the correct result. One way of

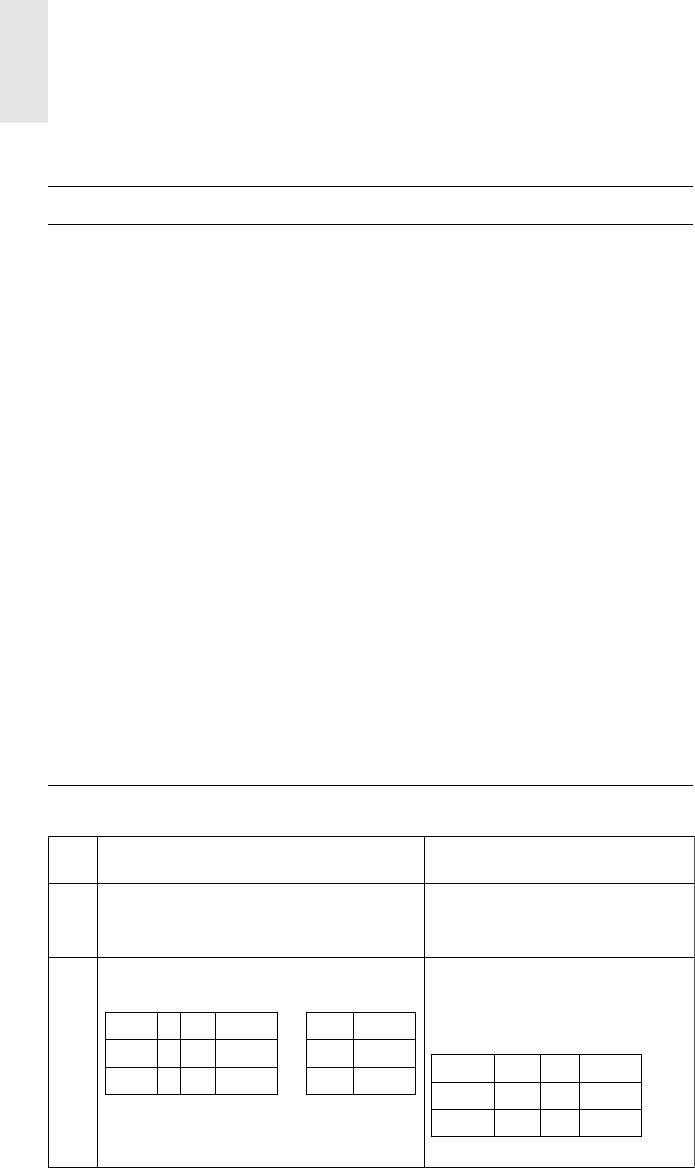

TABLE 2.1 Vital Program Testing Guidelines

Principle

Number Principle

1 A necessary part of a test case is a definition of the expected

output or result.

2 A programmer should avoid attempting to test his or her own

program.

3 A programming organization should not test its own programs.

4 Any testing process should include a thorough inspection of the

results of each test.

5 Test cases must be written for input conditions that are invalid

and unexpected, as well as for those that are valid and expected.

6 Examining a program to see if it does not do what it is supposed

to do is only half the battle; the other half is seeing whether the

program does what it is not supposed to do.

7 Avoid throwaway test cases unless the program is truly a

throwaway program.

8 Do not plan a testing effort under the tacit assumption that no

errors will be found.

9 The probability of the existence of more errors in a section of a

program is proportional to the number of errors already found in

that section.

10 Testing is an extremely creative and intellectually challenging

task.

The Psychology and Economics of Software Testing 13

C02 08/25/2011 11:54:12 Page 14

combating this is to encourage a detailed examination of all output

by precisely spelling out, in advance, the expected output of the pro-

gram. Therefore, a test case must consist of two components:

1. A description of the input data to the program.

2. A precise description of the correct output of the program for

that set of input data.

A problem may be characterized as a fact or group of facts for

which we have no acceptable explanation, that seem unusual, or that

fail to fit in with our expectations or preconceptions. It should be

obvious that some prior beliefs are required if anything is to appear

problematic. If there are no expectations, there can be no surprises.
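A minimal sketch of a test case with both components follows. It assumes a hypothetical `classify` method standing in for the triangle program of Chapter 1; its name, signature, and result strings are illustrative, not the book's. Because each expected result is recorded before the program runs, deciding whether a test passed becomes a comparison rather than a judgment formed after seeing the output.

```java
import java.util.List;

public class ExpectedOutputDemo {
    // Hypothetical triangle classifier standing in for the program under test.
    static String classify(int a, int b, int c) {
        if (a <= 0 || b <= 0 || c <= 0) return "invalid";
        // Triangle inequality, computed in long to avoid int overflow.
        if ((long) a + b <= c || (long) a + c <= b || (long) b + c <= a) return "invalid";
        if (a == b && b == c) return "equilateral";
        if (a == b || b == c || a == c) return "isosceles";
        return "scalene";
    }

    // Component 1: the input data. Component 2: the expected output, fixed in advance.
    static final class TestCase {
        final int a, b, c;
        final String expected;
        TestCase(int a, int b, int c, String expected) {
            this.a = a; this.b = b; this.c = c; this.expected = expected;
        }
    }

    // Runs each case and returns how many produced something other than the expected output.
    static int runAll(List<TestCase> cases) {
        int failures = 0;
        for (TestCase t : cases) {
            String actual = classify(t.a, t.b, t.c);
            if (!actual.equals(t.expected)) {
                failures++;
                System.out.printf("FAIL %d,%d,%d: expected %s, got %s%n",
                        t.a, t.b, t.c, t.expected, actual);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<TestCase> cases = List.of(
                new TestCase(3, 4, 5, "scalene"),
                new TestCase(2, 2, 2, "equilateral"),
                new TestCase(2, 2, 3, "isosceles"));
        System.out.println("failures: " + runAll(cases));
    }
}
```

A failing case prints the input, the expected result, and the actual result, so detecting the error does not depend on anyone noticing that the output merely looks wrong.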

Principle 2: A programmer should avoid attempting to test his or her

own program.

Any writer knows, or should know, that it’s a bad idea to attempt to edit or proofread his or her own work. Writers know what the piece is supposed to say, and hence may not recognize when it says otherwise. And they really don’t want to find errors in their own work. The

same applies to software authors.

Another problem arises with a change in focus on a software proj-

ect. After a programmer has constructively designed and coded a pro-

gram, it is extremely difficult to suddenly change perspective to look

at the program with a destructive eye.

As many homeowners know, removing wallpaper (a destructive

process) is not easy, but it is almost unbearably depressing if it was

your hands that hung the paper in the first place. Similarly, most pro-

grammers cannot effectively test their own programs because they

cannot bring themselves to shift mental gears to attempt to expose

errors. Furthermore, a programmer may subconsciously avoid find-

ing errors for fear of retribution from peers or a supervisor, a client,

or the owner of the program or system being developed.

In addition to these psychological issues, there is a second signifi-

cant problem: The program may contain errors due to the program-

mer’s misunderstanding of the problem statement or specification. If

this is the case, it is likely that the programmer will carry the same

misunderstanding into tests of his or her own program.

This does not mean that it is impossible for a programmer to test

his or her own program. Rather, it implies that testing is more effective and successful if someone else does it. However, as we will


discuss in more detail in Chapter 3, developers can be valuable mem-

bers of the testing team when the program specification and the pro-

gram code itself are being evaluated.

Note that this argument does not apply to debugging (correcting

known errors); debugging is more efficiently performed by the origi-

nal programmer.

Principle 3: A programming organization should not test its own

programs.

The argument here is similar to that made in the previous princi-

ple. A project or programming organization is, in many senses, a liv-

ing organization with psychological problems similar to those of

individual programmers. Furthermore, in most environments, a pro-

gramming organization or a project manager is largely measured on

the ability to produce a program by a given date and for a certain cost.

One reason for this is that it is easy to measure time and cost objec-

tives, whereas it is extremely difficult to quantify the reliability of a

program. Therefore, it is difficult for a programming organization to

be objective in testing its own programs, because the testing process,

if approached with the proper definition, may be viewed as decreasing

the probability of meeting the schedule and the cost objectives.

Again, this does not say that it is impossible for a programming

organization to find some of its errors, because organizations do

accomplish this with some degree of success. Rather, it implies that it

is more economical for testing to be performed by an objective, inde-

pendent party.

Principle 4: Any testing process should include a thorough inspection

of the results of each test.

This is probably the most obvious principle, but again it is some-

thing that is often overlooked. We’ve seen numerous experiments

that show many subjects failed to detect certain errors, even when

symptoms of those errors were clearly observable on the output list-

ings. Put another way, errors that are found in later tests were often

missed in the results from earlier tests.

Principle 5: Test cases must be written for input conditions that are

invalid and unexpected, as well as for those that are valid

and expected.

There is a natural tendency when testing a program to concentrate

on the valid and expected input conditions, to the neglect of the


invalid and unexpected conditions. For instance, this tendency frequently appears in the testing of the triangle program in Chapter 1. Few people, for example, feed the program the numbers 1, 2, 5 to ensure that the program does not erroneously interpret this as a scalene triangle instead of an invalid triangle. Also, many errors that are suddenly discovered in production software turn up when it is used in some new or unexpected way. It is hard, if not impossible, to define all the use cases for software testing. Therefore, test cases representing unexpected and invalid input conditions seem to have a higher error-detection yield than do test cases for valid input conditions.
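The sketch below shows such test inputs side by side with a valid one. It assumes a hypothetical text front end, `parseAndClassify`, that reads the three sides as a comma-separated line; the format and result strings are illustrative. Inputs such as 1, 2, 5 (which violate the triangle inequality), -3, 4, 5, and 2, A, 2 are exactly the ones this principle warns are most often skipped.

```java
public class InvalidInputDemo {
    // Hypothetical text front end for the triangle program: parses "a,b,c" and
    // returns "invalid" unless the input is three positive ints that form a triangle.
    static String parseAndClassify(String line) {
        String[] parts = line.split(",");
        if (parts.length != 3) return "invalid";               // e.g. "3,4"
        long[] s = new long[3];
        for (int i = 0; i < 3; i++) {
            try {
                s[i] = Integer.parseInt(parts[i].trim());       // rejects "A" and out-of-range values
            } catch (NumberFormatException e) {
                return "invalid";                               // e.g. "2,A,2"
            }
            if (s[i] <= 0) return "invalid";                    // e.g. "-3,4,5"
        }
        // Each side must be shorter than the sum of the other two (long math avoids overflow).
        if (s[0] + s[1] <= s[2] || s[0] + s[2] <= s[1] || s[1] + s[2] <= s[0]) {
            return "invalid";                                   // e.g. "1,2,5"
        }
        if (s[0] == s[1] && s[1] == s[2]) return "equilateral";
        if (s[0] == s[1] || s[1] == s[2] || s[0] == s[2]) return "isosceles";
        return "scalene";
    }

    public static void main(String[] args) {
        String[] inputs = {"3,4,5", "1,2,5", "-3,4,5", "2,A,2", "3,4"};
        for (String input : inputs) {
            System.out.println(input + " -> " + parseAndClassify(input));
        }
    }
}
```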

Principle 6: Examining a program to see if it does not do what it is supposed to do is only half the battle; the other half is seeing

whether the program does what it is not supposed to do.

This is a corollary to the previous principle. Programs must be

examined for unwanted side effects. For instance, a payroll program

that produces the correct paychecks is still an erroneous program if it

also produces extra checks for nonexistent employees, or if it over-

writes the first record of the personnel file.
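A sketch of checking both halves, assuming a hypothetical payroll routine that takes a list of employee names and returns the checks to print (names, types, and the deliberately faulty variant are illustrative). The first test checks what the program is supposed to do; the other two check that it does not produce extra checks or alter the personnel records.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

public class PayrollSideEffects {
    // Hypothetical payroll routine: one check ("name:amount") per employee on file.
    static List<String> issueChecks(List<String> employees, Integer amount) {
        List<String> checks = new ArrayList<>();
        for (String name : employees) {
            checks.add(name + ":" + amount);
        }
        return checks;
    }

    // Deliberately faulty variant: every real employee is paid correctly, but it also
    // pays a nonexistent employee and overwrites the first personnel record.
    static List<String> faultyIssueChecks(List<String> employees, Integer amount) {
        List<String> checks = issueChecks(employees, amount);
        checks.add("ghost:" + amount);
        employees.set(0, "overwritten");
        return checks;
    }

    // Passes only if the routine does what it should AND nothing it should not.
    // Assumes employee names are unique.
    static boolean verify(BiFunction<List<String>, Integer, List<String>> routine,
                          List<String> employees, int amount) {
        List<String> before = new ArrayList<>(employees);   // snapshot of the personnel file
        List<String> checks = routine.apply(employees, amount);

        // Half the battle: every employee on file gets the right check.
        boolean everyonePaid = true;
        for (String name : before) {
            if (!checks.contains(name + ":" + amount)) everyonePaid = false;
        }
        // The other half: no extra checks and no changes to the records.
        boolean noExtraChecks = checks.size() == before.size();
        boolean recordsUntouched = before.equals(employees);
        return everyonePaid && noExtraChecks && recordsUntouched;
    }

    public static void main(String[] args) {
        System.out.println(verify(PayrollSideEffects::issueChecks,
                new ArrayList<>(List.of("alice", "bob")), 1000));        // true
        System.out.println(verify(PayrollSideEffects::faultyIssueChecks,
                new ArrayList<>(List.of("alice", "bob")), 1000));        // false
    }
}
```

Note that the faulty variant passes the first half of the check; only the tests for unwanted side effects catch it.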

Principle 7: Avoid throwaway test cases unless the program is truly a

throwaway program.

This problem is seen most often with interactive systems to test

programs. A common practice is to sit at a terminal and invent test

cases on the fly, and then send these test cases through the program.

The major issue is that test cases represent a valuable investment

that, in this environment, disappears after the testing has been com-

pleted. Whenever the program has to be tested again (e.g., after cor-

recting an error or making an improvement), the test cases must be

reinvented. More often than not, since this reinvention requires a

considerable amount of work, people tend to avoid it. Therefore, the

retest of the program is rarely as rigorous as the original test, mean-

ing that if the modification causes a previously functional part of the

program to fail, this error often goes undetected. Saving test cases

and running them again after changes to other components of the

program is known as regression testing.
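A sketch of the alternative to throwaway test cases: the cases are written down once, with their expected output, and rerun mechanically after every change. For self-containment the saved cases sit in a string constant rather than a file, and a hypothetical `sortAscending` method (echoing the ascending-order sort mentioned earlier in this chapter) stands in for the program under test; neither detail comes from the book.

```java
import java.util.Arrays;

public class RegressionSuite {
    // Saved test cases, one per line: input => expected output.
    // In practice these would live in a file kept alongside the program.
    static final String SAVED_CASES = String.join("\n",
            "3 1 2 => 1 2 3",
            "5 5 1 => 1 5 5",
            "-1 0 -2 => -2 -1 0",
            "7 => 7");

    // Hypothetical program under test: sorts integers into ascending order.
    static int[] sortAscending(int[] values) {
        int[] copy = values.clone();
        Arrays.sort(copy);
        return copy;
    }

    static int[] parse(String text) {
        return Arrays.stream(text.trim().split("\\s+")).mapToInt(Integer::parseInt).toArray();
    }

    // Reruns every saved case and returns the number of failures.
    static int rerun(String savedCases) {
        int failures = 0;
        for (String line : savedCases.split("\n")) {
            String[] parts = line.split("=>");
            int[] actual = sortAscending(parse(parts[0]));
            int[] expected = parse(parts[1]);
            if (!Arrays.equals(actual, expected)) {
                failures++;
                System.out.println("REGRESSION: " + line + " got " + Arrays.toString(actual));
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("failures: " + rerun(SAVED_CASES));
    }
}
```

Because rerunning costs nothing but machine time, the retest after a modification can be as rigorous as the original test.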

Principle 8: Do not plan a testing effort under the tacit assumption

that no errors will be found.

This is a mistake project managers often make and is a sign of the

use of the incorrect definition of testing—that is, the assumption that


testing is the process of showing that the program functions correctly.

Once again, the definition of testing is the process of executing a pro-

gram with the intent of finding errors. And it should be obvious from

our previous discussions that it is impossible to develop a program that is completely error free. Even after extensive testing and error

correction, it is safe to assume that errors still exist; they simply have

not yet been found.

Principle 9: The probability of the existence of more errors in a section

of a program is proportional to the number of errors al-

ready found in that section.

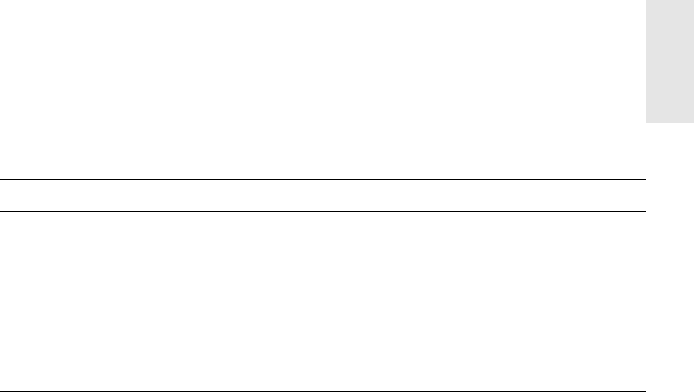

This phenomenon is illustrated in Figure 2.2. At first glance this concept may seem nonsensical, but it is a phenomenon present in many programs. For instance, if a program consists of two modules, classes, or subroutines, A and B, and five errors have been found in module A, and only one error has been found in module B, and if

module A has not been purposely subjected to a more rigorous test,

then this principle tells us that the likelihood of more errors in mod-

ule A is greater than the likelihood of more errors in module B.

Another way of stating this principle is to say that errors tend to

come in clusters and that, in the typical program, some sections

seem to be much more prone to errors than other sections, although

nobody has supplied a good explanation of why this occurs. The phe-

nomenon is useful in that it gives us insight or feedback in the testing

process. If a particular section of a program seems to be much more

prone to errors than other sections, then this phenomenon tells us that, in terms of yield on our testing investment, additional testing efforts are best focused against this error-prone section.

FIGURE 2.2 The Surprising Relationship between Errors Remaining and Errors Found.
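One way to act on this principle is sketched below. It assumes a simple heuristic that the book does not prescribe: the remaining testing hours are divided among modules in proportion to the errors already found in each. With the five errors in module A and one in module B from the example above, A receives five-sixths of a hypothetical 60-hour budget.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class ErrorClusterFocus {
    // Divides totalHours among modules in proportion to the errors found so far.
    static Map<String, Double> allocate(Map<String, Integer> errorsFound, double totalHours) {
        int totalErrors = 0;
        for (int n : errorsFound.values()) {
            totalErrors += n;
        }
        Map<String, Double> hours = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> e : errorsFound.entrySet()) {
            double h = totalErrors == 0
                    ? totalHours / errorsFound.size()           // no errors yet: split evenly
                    : e.getValue() * totalHours / totalErrors;  // weight by errors found
            hours.put(e.getKey(), h);
        }
        return hours;
    }

    public static void main(String[] args) {
        Map<String, Integer> found = new LinkedHashMap<>();
        found.put("A", 5);
        found.put("B", 1);
        System.out.println(allocate(found, 60.0));   // {A=50.0, B=10.0}
    }
}
```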

Principle 10: Testing is an extremely creative and intellectually chal-

lenging task.

It is probably true that the creativity required in testing a large program exceeds the creativity required in designing that program. We already have seen that it is impossible to test a program sufficiently to guarantee the absence of all errors. Methodologies discussed later in this book help you develop a reasonable set of test cases for a program, but these methodologies still require a significant amount of creativity.

Summary

As you proceed through this book, keep in mind these important princi-

ples of testing:

Testing is the process of executing a program with the intent of find-

ing errors.

Testing is more successful when not performed by the developer(s).

A good test case is one that has a high probability of detecting an

undiscovered error.

A successful test case is one that detects an undiscovered error.

Successful testing includes carefully defining expected output as well

as input.

Successful testing includes carefully studying test results.


C03 08/26/2011 12:8:40 Page 19

3

Program Inspections, Walkthroughs, and Reviews

For many years, most of us in the programming community worked under two assumptions: that programs are written solely for machine execution and are not intended for people to read, and that the only way to test a program is to execute it on a machine. This attitude began to change

in the early 1970s through the efforts of program developers who first saw

the value in reading code as part of a comprehensive testing and debugging

regimen.

Today, not all testers of software applications read code, but the concept

of studying program code as part of a testing effort certainly is widely ac-

cepted. Several factors may affect the likelihood that a given testing and

debugging effort will include people actually reading program code: the size or complexity of the application, the size of the development team,

the timeline for application development (whether the schedule is relaxed

or intense, for example), and, of course, the background and culture of the

programming team.

For these reasons, we will discuss the process of noncomputer-based

testing (“human testing”) before we delve into the more traditional computer-based testing techniques. Human testing techniques are quite effective in finding errors, so much so that every programming project should use one or more of these techniques. You should apply these

methods between the time the program is coded and when computer-

based testing begins. You also can develop and apply analogous methods


at earlier stages in the programming process (such as at the end of each

design stage), but these are outside the scope of this book.

Before we begin the discussion of human testing techniques, take note

of this important point: Because the involvement of humans results in less

formal methods than mathematical proofs conducted by a computer, you

may feel skeptical that something so simple and informal can be useful.

Just the opposite is true. These informal techniques don’t get in the way of

successful testing; rather, they contribute substantially to productivity and

reliability in two major ways.

First, it is generally recognized that the earlier errors are found, the lower

the costs of correcting the errors and the higher the probability of correcting them correctly. Second, programmers seem to experience a psychological shift when computer-based testing commences. Internally induced pressures seem to build rapidly and there is a tendency to want to “fix this darn bug as soon as possible.” Because of these pressures, programmers tend to make more mistakes when correcting an error found during computer-based testing than they make when correcting an error found earlier.

Inspections and Walkthroughs

The three primary human testing methods are code inspections, walkthroughs, and user (or usability) testing. We cover the first two of these,

which are code-oriented methods, in this chapter. These methods can be

used at virtually any stage of software development, after an application is

deemed to be complete or as each module or unit is complete (see Chapter

5 for more information on module testing). We discuss user testing in

detail in Chapter 7.

The two code inspection methods have a lot in common, so we will dis-

cuss their similarities together. Their differences are enumerated in subse-

quent sections.

Inspections and walkthroughs involve a team of people reading or visually inspecting a program. With either method, participants must conduct some preparatory work. The climax is a “meeting of the minds,”

at a participant conference. The objective of the meeting is to find errors

but not to find solutions to the errors—that is, to test, not debug.

Code inspections and walkthroughs have been widely used for some

time. In our opinion, the reason for their success is related to some of the

principles identified in Chapter 2.


In a walkthrough, a group of developers—with three or four being an

optimal number—performs the review. Only one of the participants is the

author of the program. Therefore, the majority of program testing is con-

ducted by people other than the author, which follows testing principle 2,

which states that an individual is usually ineffective in testing his or her

own program. (Refer to Chapter 2, Table 2.1, and the subsequent discus-

sion for all 10 program testing principles.)

An inspection or walkthrough is an improvement over the older desk-

checking process (whereby a programmer reads his or her own program

before testing it). Inspections and walkthroughs are more effective, again

because people other than the program’s author are involved in the

process.

Another advantage of walkthroughs, resulting in lower debugging

(error-correction) costs, is the fact that when an error is found it usually is

located precisely in the code, as opposed to black-box testing, where you only receive an unexpected result. Moreover, this process frequently exposes a batch of errors, allowing the errors to be corrected later en masse. Computer-based testing, on the other hand, normally exposes only

a symptom of the error (e.g., the program does not terminate or the

program prints a meaningless result), and errors are usually detected and

corrected one by one.

These human testing methods generally are effective in finding from 30 to 70 percent of the logic-design and coding errors in typical programs. They are not effective, however, in detecting high-level design errors, such as errors made in the requirements analysis process. Note that a success

rate of 30 to 70 percent doesn’t mean that up to 70 percent of all errors

might be found. Recall from Chapter 2 that we can never know the total

number of errors in a program. Thus, what this means is that these meth-

ods are effective in finding up to 70 percent of all errors found by the end

of the testing process.

Of course, a possible criticism of these statistics is that the human processes find only the “easy” errors (those that would be trivial to find with computer-based testing) and that the difficult, obscure, or tricky errors can be found only by computer-based testing. However, some testers using these techniques have found that the human processes tend to be more effective than the computer-based testing processes in finding certain types of errors, while the opposite is true for other types of errors (e.g., uninitialized variables versus divide-by-zero errors).

Program Inspections, Walkthroughs, and Reviews 21


The implication is that inspections/walkthroughs and computer-based

testing are complementary; error-detection efficiency will suffer if one or

the other is not present.

Finally, although these processes are invaluable for testing new pro-

grams, they are of equal, or even higher, value in testing modifications

to programs. In our experience, modifying an existing program is a process

that is more error prone (in terms of errors per statement written) than writing a new program. Therefore, program modifications also should

be subjected to these testing processes as well as regression testing

techniques.

Code Inspections

A code inspection is a set of procedures and error-detection techniques

for group code reading. Most discussions of code inspections focus on the

procedures, forms to be filled out, and so on. Here, after a short summary

of the general procedure, we will focus on the actual error-detection

techniques.

Inspection Team

An inspection team usually consists of four people. The first of the four plays the role of moderator, which in this context is tantamount to that of a quality-control engineer. The moderator is expected to be a competent programmer, but he or she is not the author of the program

and need not be acquainted with the details of the program. Moderator

duties include:

Distributing materials for, and scheduling, the inspection session.

Leading the session.

Recording all errors found.

Ensuring that the errors are subsequently corrected.

The second team member is the programmer. The remaining team

members usually are the program’s designer (if different from the program-

mer) and a test specialist. The specialist should be well versed in software

testing and familiar with the most common programming errors, which we

discuss later in this chapter.


Inspection Agenda

Several days in advance of the inspection session, the moderator distrib-

utes the program’s listing and design specification to the other participants.

The participants are expected to familiarize themselves with the material

prior to the session. During the session, two activities occur:

1. The programmer narrates, statement by statement, the logic of the

program. During the discourse, other participants should raise ques-

tions, which should be pursued to determine whether errors exist. It

is likely that the programmer, rather than the other team members,

will find many of the errors identified during this narration. In other

words, the simple act of reading aloud a program to an audience seems

to be a remarkably effective error-detection technique.

2. The program is analyzed with respect to checklists of historically

common programming errors (such a checklist is discussed in the

next section).

The moderator is responsible for ensuring that the discussions proceed

along productive lines and that the participants focus their attention on

finding errors, not correcting them. (The programmer corrects errors after

the inspection session.)

Upon the conclusion of the inspection session, the programmer is given

a list of the errors uncovered. If more than a few errors were found, or if

any of the errors require a substantial correction, the moderator might

make arrangements to reinspect the program after those errors have been

corrected. This subsequent list of errors is also analyzed, categorized, and

used to refine the error checklist to improve the effectiveness of future

inspections.

As stated, this inspection process usually concentrates on discovering

errors, not correcting them. That said, some teams may find that when a

minor problem is discovered, two or three people, including the program-

mer responsible for the code, may propose design changes to handle this

special case. The discussion of this minor problem may, in turn, focus

the group’s attention on that particular area of the design. During the dis-

cussion of the best way to alter the design to handle this minor problem,

someone may notice a second problem. Now that the group has seen two

problems related to the same aspect of the design, comments likely will


come thick and fast, with interruptions every few sentences. In a few min-

utes, this whole area of the design could be thoroughly explored, and any

problems made obvious.

The time and location of the inspection should be planned to prevent all

outside interruptions. The optimal amount of time for the inspection session appears to be from 90 to 120 minutes. The session is a mentally taxing experience; thus, longer sessions tend to be less productive. Most inspections proceed at a rate of approximately 150 program statements per hour.

For that reason, large programs should be examined over multiple inspec-

tions, each dealing with one or several modules or subroutines.
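These figures support a quick planning estimate. The sketch below assumes the rates just quoted (about 150 statements per hour, sessions of 90 to 120 minutes) and a hypothetical 2,000-statement program; it simply rounds up, whereas a real plan would also split sessions at module or subroutine boundaries.

```java
public class InspectionPlanner {
    // Statements one session can cover at the given reading rate and length.
    static int statementsPerSession(int statementsPerHour, int sessionMinutes) {
        return statementsPerHour * sessionMinutes / 60;
    }

    // Sessions needed to cover the whole program, rounding up.
    static int sessionsNeeded(int totalStatements, int statementsPerHour, int sessionMinutes) {
        int perSession = statementsPerSession(statementsPerHour, sessionMinutes);
        return (totalStatements + perSession - 1) / perSession;
    }

    public static void main(String[] args) {
        int programSize = 2000; // hypothetical program size, in statements
        System.out.println("120-minute sessions: " + sessionsNeeded(programSize, 150, 120)); // 7
        System.out.println("90-minute sessions:  " + sessionsNeeded(programSize, 150, 90));  // 9
    }
}
```

At 120 minutes a session covers about 300 statements, and at 90 minutes about 225.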

Human Agenda

Note that for the inspection process to be effective, the testing group must

adopt an appropriate attitude. If, for example, the programmer views the

inspection as an attack on his or her character and adopts a defensive pos-

ture, the process will be ineffective. Rather, the programmer must leave

his or her ego at the door and place the process in a positive and construc-

tive light, keeping in mind that the objective of the inspection is to find

errors in the program and, thus, improve the quality of the work. For this

reason, most people recommend that the results of an inspection be a

confidential matter, shared only among the participants. In particular, if

managers somehow make use of the inspection results (to assume or imply

that the programmer is inefficient or incompetent, for example), the pur-

pose of the process may be defeated.

Side Benefits of the Inspection Process

The inspection process has several beneficial side effects, in addition to its

main effect of finding errors. For one, the programmer usually receives

valuable feedback concerning programming style, choice of algorithms,

and programming techniques. The other participants gain in a similar way

by being exposed to another programmer’s errors and programming style.

In general, this type of software testing helps reinforce a team approach to

this particular project and to projects that involve these participants in

general. Reducing the potential for the evolution of an adversarial relation-

ship, in favor of a cooperative, team approach to projects, can lead to more

efficient and reliable program development.


Finally, the inspection process is a way of identifying early the most error-prone sections of the program, helping to focus attention more directly on these sections during the computer-based testing processes

(number 9 of the testing principles given in Chapter 2).

An Error Checklist for Inspections

An important part of the inspection process is the use of a checklist to examine the program for common errors. Unfortunately, some checklists concentrate more on issues of style than on errors (e.g., “Are comments accurate and meaningful?” and “Are if-else code blocks and do-while groups aligned?”), and the error checks are too nebulous to be useful (such as, “Does the code meet the design requirements?”). The checklist in

this section, divided into six categories, was compiled after many years of

study of software errors. It is largely language-independent, meaning that

most of the errors can occur with any programming language. You may

wish to supplement this list with errors peculiar to your programming lan-

guage and with errors detected after completing the inspection process.

Data Reference Errors

Does a referenced variable have a value that is unset or uninitialized? This is probably the most frequent programming error, and it occurs in a wide variety of circumstances. For each reference to a data item (variable, array element, field in a structure), attempt to ‘‘prove’’ informally that the item has a value at that point.
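In Java, the compiler rejects a read of a local variable that has not been definitely assigned, but it cannot catch every unset data item: array elements and fields silently receive default values such as null. The following minimal sketch (the class and method names are hypothetical) shows an array slot that was allocated but never filled, and the informal ‘‘proof’’ turned into an explicit check.

```java
public class UnsetElement {
    // Hypothetical routine: sums the lengths of the names in the array.
    // It assumes every slot was assigned, which is exactly what an
    // inspector should try to prove.
    static int totalLength(String[] names) {
        int total = 0;
        for (String name : names) {
            total += name.length();   // NullPointerException if a slot was never set
        }
        return total;
    }

    // Defensive variant: unset (null) slots are skipped explicitly.
    static int totalLengthChecked(String[] names) {
        int total = 0;
        for (String name : names) {
            if (name != null) {
                total += name.length();
            }
        }
        return total;
    }

    public static void main(String[] args) {
        String[] names = new String[3];   // all three slots start out as null
        names[0] = "Ada";
        names[1] = "Grace";               // names[2] is never assigned

        System.out.println(totalLengthChecked(names));   // prints 8
        try {
            totalLength(names);
        } catch (NullPointerException e) {
            System.out.println("names[2] was never initialized");
        }
    }
}
```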

For all array references, is each subscript value within the defined bounds of the corresponding dimension?
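Bounds violations are most often off-by-one errors in loop conditions. The minimal Java sketch below uses hypothetical method names. Java checks every subscript at run time and throws ArrayIndexOutOfBoundsException, whereas in C or C++ the same loop has undefined behavior and may silently read past the end of the array.

```java
public class BoundsCheck {
    // Buggy: "<=" lets i reach scores.length, one past the last valid index.
    static int sumBuggy(int[] scores) {
        int sum = 0;
        for (int i = 0; i <= scores.length; i++) {
            sum += scores[i];
        }
        return sum;
    }

    // Correct: valid subscripts run from 0 to scores.length - 1.
    static int sum(int[] scores) {
        int sum = 0;
        for (int i = 0; i < scores.length; i++) {
            sum += scores[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        int[] scores = {70, 85, 90};
        System.out.println(sum(scores));   // prints 245
        try {
            sumBuggy(scores);
        } catch (ArrayIndexOutOfBoundsException e) {
            System.out.println("Subscript out of bounds: " + e.getMessage());
        }
    }
}
```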

For all array references, does each subscript have an integer value? This is not necessarily an error in all languages, but, in general, working with noninteger array subscripts is a dangerous practice.

For all references through pointer or reference variables, is the referenced memory currently allocated? This is known as the ‘‘dangling reference’’ problem. It occurs in situations where the lifetime of a pointer is greater than the lifetime of the referenced memory. One instance occurs where a pointer references a local variable within a procedure, the pointer value is assigned to an output parameter or a global variable, the procedure returns (freeing the referenced location), and later the program attempts to use the pointer value. In a manner similar to checking for the prior errors, try to prove informally that, in each reference using a pointer variable, the referenced memory exists.

When a memory area has alias names with differing attributes, does the data value in this area have the correct attributes when referenced via one of these names? Situations to look for are the use of the EQUIVALENCE statement in Fortran and the REDEFINES clause in COBOL. As an example, a Fortran program contains a real variable A and an integer variable B; both are made aliases for the same memory area by using an EQUIVALENCE statement. If the program stores a value into A and then references variable B, an error is likely present, since the machine would interpret the floating-point bit representation in the memory area as an integer.
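Java has no EQUIVALENCE statement, but a java.nio.ByteBuffer can expose the same bytes under two different types, which reproduces the situation described above. In this sketch (the class and helper names are hypothetical), the value 1.0 is stored as a float and the same four bytes are then read back as an int.

```java
import java.nio.ByteBuffer;

public class AliasedMemory {
    // Hypothetical helper: writes a float into a 4-byte area, then reads the
    // same bytes back as an int, mimicking EQUIVALENCE of a REAL and an INTEGER.
    static int storeFloatReadInt(float a) {
        ByteBuffer area = ByteBuffer.allocate(4);
        area.putFloat(0, a);       // store a value into "A"
        return area.getInt(0);     // reference "B", which aliases the same bytes
    }

    public static void main(String[] args) {
        int b = storeFloatReadInt(1.0f);
        // B holds the IEEE 754 bit pattern of 1.0f, not the number 1.
        System.out.println(b);                        // prints 1065353216
        System.out.println(Integer.toHexString(b));   // prints 3f800000
    }
}
```

Float.floatToIntBits performs the same reinterpretation directly; the buffer version is shown because it makes the shared memory area explicit.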

Sidebar 3.1: History of COBOL and Fortran

COBOL and Fortran are older programming languages that have fueled business and scientific software development for generations of computer hardware, operating systems, and programmers.

COBOL (an acronym for COmmon Business Oriented Language) was first defined about 1959 or 1960, and was designed to support business application development on mainframe-class computers. The original specification included aspects of other languages that existed at the time. Big-name computer manufacturers and representatives of the federal government participated in this effort to create a business-oriented programming language that could run on a variety of hardware and operating system platforms.

COBOL language standards have been reviewed and updated over the years. By 2002, COBOL was available for most current operating platforms, and object-oriented versions supported the .NET development environment.

At the time of this writing, the latest version of COBOL is Visual COBOL 2010.

Fortran (originally FORTRAN, but modern references generally follow the uppercase/lowercase syntax) is a little older than COBOL, with early specifications defined in the early to middle 1950s. Like COBOL, Fortran was designed for specific types of mainframe application development, but in the scientific and numerical computation arenas. The name derives from an existing IBM system at the time, Mathematical FORmula TRANslating System. Although the original Fortran contained only 32 statements, it marked a significant improvement over the assembly-level programming that preceded it.

The current version as of the publication date of this book is Fortran 2008, formally approved by the appropriate standards committees in 2010. Like COBOL, Fortran has evolved to support a broad range of hardware and operating system platforms. However, Fortran is probably used more than COBOL in current development, as well as in older system maintenance.

Does a variable’s value have a type or attribute other than what the compiler expects? This situation might occur where a C or C++ program reads a record into memory and references it by using a structure, but the physical representation of the record differs from the structure definition.

Are there any explicit or implicit addressing problems if, on the computer being used, the units of memory allocation are smaller than the units of addressable memory? For instance, in some environments, fixed-length bit strings do not necessarily begin on byte boundaries, but addresses point only to byte boundaries. If a program computes the address of a bit string and later refers to the string through this address, the wrong memory location may be referenced. This situation also could occur when passing a bit-string argument to a subroutine.

If pointer or reference variables are used, does the referenced memory location have the attributes the compiler expects? An example of such an error is where a C++ pointer upon which a data structure is based is assigned the address of a different data structure.

If a data structure is referenced in multiple procedures or subroutines, is the structure defined identically in each procedure?

When indexing into a string, are the limits of the string off by one in indexing operations or in subscript references to arrays?

For object-oriented languages, are all inheritance requirements met in the implementing class?
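Some inheritance requirements are contracts that the compiler cannot enforce. In Java, for example, a class that overrides equals (inherited from java.lang.Object) is also expected to override hashCode so that equal objects have equal hash codes; otherwise hash-based collections misbehave. The point classes in this sketch are hypothetical.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

public class InheritanceContract {
    // Violates the Object contract: equals is overridden, hashCode is not.
    static final class BrokenPoint {
        final int x;
        final int y;
        BrokenPoint(int x, int y) { this.x = x; this.y = y; }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof BrokenPoint)) return false;
            BrokenPoint p = (BrokenPoint) o;
            return p.x == x && p.y == y;
        }
        // hashCode() is inherited from Object, so equal points usually hash differently.
    }

    // Meets the contract: equal points always produce equal hash codes.
    static final class Point {
        final int x;
        final int y;
        Point(int x, int y) { this.x = x; this.y = y; }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof Point)) return false;
            Point p = (Point) o;
            return p.x == x && p.y == y;
        }

        @Override
        public int hashCode() {
            return Objects.hash(x, y);
        }
    }

    public static void main(String[] args) {
        Set<BrokenPoint> broken = new HashSet<>();
        broken.add(new BrokenPoint(1, 2));
        // Usually false: the two equal points almost always have different
        // identity hash codes, so the set never calls equals on them.
        System.out.println(broken.contains(new BrokenPoint(1, 2)));

        Set<Point> fixed = new HashSet<>();
        fixed.add(new Point(1, 2));
        System.out.println(fixed.contains(new Point(1, 2)));   // prints true
    }
}
```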

Data Declaration Errors

Have all variables been explicitly declared? A failure to do so is not necessarily an error, but it is, nevertheless, a common source of trouble. For instance, if a Fortran subroutine receives an array parameter and fails to define the parameter as an array (as in a DIMENSION statement), a reference to the array (such as C=A(I)) is interpreted as a function call, leading to the machine’s attempting to execute the array as a program. Also, if a variable is not explicitly declared in an inner procedure or block, is it understood that the variable is shared with the enclosing block?

If not all attributes of a variable are explicitly stated in the declaration, are the defaults well understood? For instance, the default attributes received in Java are often a source of surprise when variables are not properly declared.
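One such surprise is that Java gives fields (but not local variables) default values when no initializer is written: 0 for numeric types, false for boolean, and null for references. In this minimal sketch, the Sensor class is hypothetical, and the compiler does not notice that an initializer was forgotten.

```java
public class DefaultValues {
    // Hypothetical class: none of these fields has an explicit initializer.
    static class Sensor {
        int readings;        // defaults to 0
        double calibration;  // defaults to 0.0, although 1.0 was probably intended
        boolean enabled;     // defaults to false
        String label;        // defaults to null
    }

    public static void main(String[] args) {
        Sensor s = new Sensor();
        System.out.println(s.readings);       // prints 0
        System.out.println(s.calibration);    // prints 0.0
        System.out.println(s.enabled);        // prints false
        System.out.println(s.label);          // prints null

        // A calibrated value silently becomes 0.0 instead of raising an error.
        double raw = 42.5;
        System.out.println(raw * s.calibration);   // prints 0.0

        // By contrast, the compiler rejects use of an unassigned local variable:
        // int count;
        // System.out.println(count);   // error: variable count might not have been initialized
    }
}
```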

Where a variable is initialized in a declarative statement, is it properly initialized? In many languages, initialization of arrays and strings is somewhat complicated and, hence, error prone.

Is each variable assigned the correct length and data type?

Is the initialization of a variable consistent with its memory type? For instance, if a variable in a Fortran subroutine needs to be reinitialized each time the subroutine is called, it must be initialized with an assignment statement rather than a DATA statement.
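Java has no DATA statement, but a static field with an initializer behaves in a comparable way: it is initialized once, when the class is loaded, and keeps its value between calls. In this sketch (all names are hypothetical), a counter that was meant to start from zero on every call does not.

```java
public class InitializationTiming {
    // Initialized once, at class-load time (comparable to a DATA statement).
    private static int positivesSeen = 0;

    // Buggy: intended to count the positive values in one batch, but the
    // count carries over from every earlier call.
    static int countPositivesBuggy(int[] batch) {
        for (int v : batch) {
            if (v > 0) {
                positivesSeen++;
            }
        }
        return positivesSeen;
    }

    // Correct: the local counter is reinitialized by assignment on each call.
    static int countPositives(int[] batch) {
        int count = 0;
        for (int v : batch) {
            if (v > 0) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int[] first = {3, -1, 4};
        int[] second = {5};
        System.out.println(countPositivesBuggy(first));    // prints 2
        System.out.println(countPositivesBuggy(second));   // prints 3, not 1
        System.out.println(countPositives(second));        // prints 1
    }
}
```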

Are there any variables with similar names (e.g., VOLT and VOLTS)? This is not necessarily an error, but it should be seen as a warning that the names may have been confused somewhere within the program.

Computation Errors

Are there any computations using variables having inconsistent (such as nonarithmetic) data types?

Are there any mixed-mode computations? An example is combining floating-point and integer variables in one expression. Such occurrences are not necessarily errors, but they should be explored carefully to ensure that the conversion rules of the language are understood.


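Consider the following Java program, which shows how integer division silently discards the fractional part of a result:

public class IntegerDivision {
    public static void main(String[] args) {
        int x = 1;
        int y = 2;
        int z = 0;
        z = x / y;  // both operands are int, so 1 / 2 truncates to 0
        System.out.println("z = " + z);
    }
}

OUTPUT:

z = 0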
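A related mixed-mode trap arises when the result of an integer division is assigned to a floating-point variable: the conversion to double happens only after the integer division has already discarded the fraction. The class and method names in this sketch are illustrative.

```java
public class MixedMode {
    // Buggy: sum / count is evaluated in int arithmetic, then widened to double.
    static double averageBuggy(int sum, int count) {
        return sum / count;
    }

    // Correct: casting one operand forces floating-point division.
    static double average(int sum, int count) {
        return (double) sum / count;
    }

    public static void main(String[] args) {
        System.out.println(averageBuggy(7, 2));   // prints 3.0
        System.out.println(average(7, 2));        // prints 3.5
    }
}
```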

Are there any computations using variables having the same data type but of different lengths?

Is the data type of the target variable of an assignment smaller than the data type or result of the right-hand expression?
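In Java, assigning a wider integer type to a narrower one requires an explicit cast, but the cast itself performs no range check: the high-order bits are simply discarded. This short sketch (method names hypothetical) illustrates both of the preceding questions, since int, long, and byte are integer types of different lengths.

```java
public class Narrowing {
    // Narrowing casts discard high-order bits without any range check.
    static byte narrowToByte(int value) {
        return (byte) value;
    }

    static int narrowToInt(long value) {
        return (int) value;
    }

    public static void main(String[] args) {
        System.out.println(narrowToByte(300));             // prints 44
        System.out.println(narrowToInt(3_000_000_000L));   // prints -1294967296

        // Math.toIntExact (Java 8 and later) throws instead of truncating silently.
        try {
            Math.toIntExact(3_000_000_000L);
        } catch (ArithmeticException e) {
            System.out.println("overflow detected: " + e.getMessage());
        }
    }
}
```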

Is overflow or underflow possible during the computation of an expression? That is, the end result may appear to have a valid value, but an intermediate result might be too big or too small for the programming language’s data types.
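A classic instance is averaging two large int values: both operands and the true average fit in an int, but the intermediate sum does not. This minimal Java sketch uses hypothetical method names and shows two common repairs.

```java
public class IntermediateOverflow {
    // Buggy: a + b can exceed Integer.MAX_VALUE and wrap around to a negative value.
    static int midpointBuggy(int a, int b) {
        return (a + b) / 2;
    }

    // Safe whenever a and b are both nonnegative: b - a cannot overflow.
    static int midpoint(int a, int b) {
        return a + (b - a) / 2;
    }

    // Alternative: widen to long, so the sum cannot overflow.
    static int midpointWide(int a, int b) {
        return (int) (((long) a + b) / 2);
    }

    public static void main(String[] args) {
        int a = 2_000_000_000;
        int b = 2_000_000_000;
        System.out.println(midpointBuggy(a, b));  // prints -147483648
        System.out.println(midpoint(a, b));       // prints 2000000000
        System.out.println(midpointWide(a, b));   // prints 2000000000
    }
}
```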

Is it possible for the divisor in a division operation to be zero?
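How a zero divisor shows up depends on the data type. In Java, integer division by zero throws ArithmeticException, while floating-point division by zero quietly produces Infinity or NaN, which can then propagate through later computations. The guard method in this sketch is hypothetical.

```java
public class ZeroDivisor {
    // Hypothetical helper: rejects a zero divisor explicitly rather than
    // relying on the language's default behavior.
    static double ratio(double numerator, double denominator) {
        if (denominator == 0.0) {
            throw new IllegalArgumentException("denominator must not be zero");
        }
        return numerator / denominator;
    }

    public static void main(String[] args) {
        System.out.println(1.0 / 0.0);   // prints Infinity
        System.out.println(0.0 / 0.0);   // prints NaN

        int zero = 0;
        try {
            System.out.println(1 / zero);
        } catch (ArithmeticException e) {
            System.out.println("integer division: " + e.getMessage());  // prints "integer division: / by zero"
        }

        System.out.println(ratio(3.0, 4.0));   // prints 0.75
    }
}
```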

If the underlying machine represents variables in base-2 form, are there any consequences of the resulting inaccuracy? For example, 0.1 has no exact binary representation, so adding 0.1 to itself ten times does not, in general, yield exactly 1.0 on a binary machine.
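Because 0.1 cannot be represented exactly in binary floating point, small representation errors accumulate. This sketch sums 0.1 ten times in double arithmetic and compares the result with 1.0, first exactly and then within a tolerance; the tolerance of 1e-9 is an arbitrary choice for illustration. Where exact decimal results are required (currency, for example), java.math.BigDecimal is one alternative.

```java
import java.math.BigDecimal;

public class BinaryFractions {
    // Adds 0.1 to an accumulator n times using double arithmetic.
    static double sumTenths(int n) {
        double sum = 0.0;
        for (int i = 0; i < n; i++) {
            sum += 0.1;
        }
        return sum;
    }

    // Compares two doubles within an absolute tolerance.
    static boolean approximatelyEqual(double x, double y, double tolerance) {
        return Math.abs(x - y) <= tolerance;
    }

    public static void main(String[] args) {
        double sum = sumTenths(10);
        System.out.println(sum);                                  // prints 0.9999999999999999
        System.out.println(sum == 1.0);                           // prints false
        System.out.println(approximatelyEqual(sum, 1.0, 1e-9));   // prints true

        // A BigDecimal built from the string "0.1" holds exactly one tenth.
        BigDecimal exact = BigDecimal.ZERO;
        for (int i = 0; i < 10; i++) {
            exact = exact.add(new BigDecimal("0.1"));
        }
        System.out.println(exact.compareTo(BigDecimal.ONE) == 0);  // prints true
    }
}
```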

Where applicable, can the value of a variable go outside the meaningful range? For example, statements assigning a value to the variable PROBABILITY might be checked to ensure that the assigned value will never be negative and never greater than 1.0.
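One way to put such a check into code is to validate the value at every assignment point, for example in a setter. The Probability class below is a hypothetical sketch. It also rejects NaN, which would otherwise slip past a simple pair of out-of-range comparisons, because every comparison involving NaN is false.

```java
public final class Probability {
    private double value;

    public Probability(double initial) {
        set(initial);
    }

    // Accepts only values in the meaningful range [0.0, 1.0].
    // Written as !(in range) so that NaN is rejected too.
    public void set(double p) {
        if (!(p >= 0.0 && p <= 1.0)) {
            throw new IllegalArgumentException("probability out of range: " + p);
        }
        value = p;
    }

    public double get() {
        return value;
    }

    public static void main(String[] args) {
        Probability rain = new Probability(0.3);
        System.out.println(rain.get());   // prints 0.3

        try {
            rain.set(1.2);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());   // prints "probability out of range: 1.2"
        }
        System.out.println(rain.get());   // prints 0.3; the invalid value was not stored
    }
}
```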